Lab 1

Goal

The purpose of this lab was to get acquainted with the SparkFun Artemis Nano and with our custom Ubuntu VM.

Materials

- 1 SparkFun Artemis RedBoard Nano

- 1 USB A-C cable

- 1 lithium-polymer rechargeable battery

- 1 laptop

Procedure

The IDE

Installed the Arduino IDE from the Arch Linux repos (only the IDE supports Artemis boards). The IDE also requires the arduino-avr-core package.

Installed the Arduino core in the IDE:

- Open Tools > Board > Boards Manager in the IDE

- Search for apollo3

- Choose version 1.1.2 in the drop-down

- Click Install (this took several minutes)

Chose the “SparkFun RedBoard Artemis Nano” as the board.

Testing

Uploaded four example sketches to test various parts of the board:

- Blink (from built-in examples)

- Example2_Serial (from SparkFun Apollo3 examples)

- Example4_analogRead (SparkFun)

- Example1_Microphone (SparkFun)

Also modified Example1_Microphone to blink the built-in LED when whistling.

Results

IDE

One pitfall was accessing the serial port, as Linux doesn’t give users read-write permission to it by default. The permanent fix was a custom udev rule (udev separates the keys in a rule with commas):

```
SUBSYSTEM=="tty", ATTRS{vendor}=="0x8086", ATTRS{subsystem_device}=="0x0740", MODE="0666"
```

Note that the exact requirements for this rule depend on the computer and the distro.

Testing

The Blink example worked, blinking the blue LED labeled 19.

The Serial example both received and sent text over the USB serial connection.

The analogRead sketch read temperature values which noticeably rose as I held my warm thumb against the device.

The Microphone example showed that the loudest frequency doubled when I whistled an octave higher, indicating that the microphone is working well.

I added two pieces of code to the Microphone example to make it blink the LED when I whistle.

In void setup():

```cpp
pinMode(LED_BUILTIN, OUTPUT);
```

In void loop():

```cpp
if (ui32LoudestFrequency > 800 && ui32LoudestFrequency < 2000)
    digitalWrite(LED_BUILTIN, HIGH); // turn the LED on when the frequency is in the whistling range
else
    digitalWrite(LED_BUILTIN, LOW);  // and off otherwise
```
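For reference, here is a minimal Python sketch of the idea behind the whistle detector. It assumes (as I understand the SparkFun example to work) that the "loudest frequency" is the strongest bin of a discrete Fourier transform of the microphone samples. The function names, the 16 kHz sample rate, and the 256-sample window are illustrative assumptions, not values taken from the example sketch.

```python
import math


def loudest_frequency(samples, sample_rate_hz):
    """Return the frequency (Hz) of the strongest DFT bin, skipping DC.

    A naive O(n^2) DFT, written out for clarity rather than speed.
    """
    n = len(samples)
    best_bin, best_power = 0, -1.0
    for k in range(1, n // 2):  # skip DC (k = 0) and stay below Nyquist
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        power = re * re + im * im
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * sample_rate_hz / n  # convert bin index to Hz


def is_whistle(freq_hz, low_hz=800, high_hz=2000):
    """Same band test as the Arduino code: strictly between 800 and 2000 Hz."""
    return low_hz < freq_hz < high_hz


if __name__ == "__main__":
    fs, n = 16000, 256  # hypothetical sample rate and window length
    tone = [math.sin(2 * math.pi * 1000 * i / fs) for i in range(n)]
    octave_up = [math.sin(2 * math.pi * 2000 * i / fs) for i in range(n)]
    for signal in (tone, octave_up):
        f = loudest_frequency(signal, fs)
        print(f, is_whistle(f))
```

Running it prints `1000.0 True` and then `2000.0 False`: raising the pitch by an octave doubles the detected frequency, and the strict 2000 Hz upper limit rejects it.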

This worked well. (It also picked up my squeaky chair or tapping on my desk.)

While the Artemis Nano was plugged into my computer, plugging the battery in lit the yellow CHG light.

I commented out all the serial lines of code so that the board would not attempt to establish serial communication with my computer. With those lines removed, the board recognized my whistle on battery power too.

Lab 2

Goal

Materials

- 1 Artemis Nano

- 1 USB dongle

- 1 USB A-C cable

- 1 computer running the Ubuntu 18.04 VM

Procedure

Setup

Downloaded the distribution code.

(Re)installed bleak using pip install bleak at the command line.

Uploaded the sketch ECE_4960_robot to the Artemis and opened the serial monitor at 115200 baud.

Entered the folder generated by extracting the ZIP. Ran ./main.py twice while the Artemis Nano was powered on to discover the board. Manually cached the Artemis’ MAC address to settings.py.

Ping Test

Commented out pass and uncommented # await theRobot.ping() under async def myRobotTasks() in main.py. Ran ./main.py again while the Arduino serial monitor was still open. Copied the data from the terminal, pasted it into a spreadsheet with space as the delimiter, and generated statistics.

Request Float

Modified case REQ_FLOAT in the Arduino sketch ECE_4960_robot.ino as follows:

```cpp
case REQ_FLOAT:
    Serial.println("Going to send a float");
    res_cmd->command_type = GIVE_FLOAT;
    res_cmd->length = 6;  // 2 bytes for command_type and length, plus 4 bytes for one float
    ((float *) res_cmd->data)[0] = (float) 2.71828;  // send e
    amdtpsSendData((uint8_t *) res_cmd, res_cmd->length);
    break;
```

In main.py, commented out await theRobot.ping() and uncommented await theRobot.sendCommand(Commands.REQ_FLOAT). Reran main.py and received the floating-point value of e.

Bytestream Test

In main.py, commented out await theRobot.sendCommand(Commands.REQ_FLOAT) and uncommented await theRobot.testByteStream(25). Added the following code to ECE_4960_robot.ino within if (bytestream_active):

```cpp
Serial.printf("Stream %d after %3.1f ms latency\n", bytestream_active, (micros() - finish) * 0.001);

int numInts = 3;    // how many integers will fit in this stream
bytestreamCount++;  // we are sending one bytestream now

res_cmd->command_type = BYTESTREAM_TX;
// Two bytes for command_type and length, then 4 bytes for each uint32_t or 8 for each uint64_t
res_cmd->length = 2 + bytestream_active * numInts;

start = micros();

uint32_t integer32 = 0xffffffff;          // different values to indicate whether we're getting a 32-bit
uint64_t integer64 = 0xffffffffffffffff;  // or a 64-bit number
uint32_t *p32;                            // pointers to the appropriate data types
uint64_t *p64;

switch (bytestream_active) {
    case 4: // asked for a 4-byte number!
        p32 = (uint32_t *) res_cmd->data;
        for (int i = 0; i < numInts - 2; i++) { // the -2 is so the last two values can be the count and the time
            memcpy(p32, &integer32, sizeof(integer32));
            p32++; // move 4 bytes down the array
        }
        memcpy(p32, &bytestreamCount, sizeof(bytestreamCount));
        p32++;
        memcpy(p32, &start, sizeof(start));
        break;

    case 8:  // asked for an 8-byte number!
    default: // the default is to send a 64-bit (8-byte) array
    {
        p64 = (uint64_t *) res_cmd->data;
        for (int i = 0; i < numInts - 2; i++) {
            memcpy(p64, &integer64, sizeof(integer64));
            p64++; // move 8 bytes down the array
        }
        bytestreamCount64 = (uint64_t) bytestreamCount;
        memcpy(p64, &bytestreamCount64, sizeof(bytestreamCount64)); // copy all 8 bytes, not just 4
        p64++;
        uint64_t start64 = (uint64_t) start; // widen so the whole 8-byte slot is written
        memcpy(p64, &start64, sizeof(start64));
        break;
    }
}

amdtpsSendData((uint8_t *) res_cmd, res_cmd->length);
finish = micros();
Serial.printf("Finished sending bytestream after %u microseconds\n", finish - start);
```

where start and finish were defined as global variables and finish was initialized to zero.

Repurposed bytestream_active to tell the Artemis how large an integer to send: if bytestream_active==4, the Artemis sends back an array of 32-bit (4-byte) integers, and if bytestream_active==8, it sends back 64-bit (8-byte) integers. Setting integer32=0xffffffff and integer64=0xffffffffffffffff, the largest values each type can hold, confirmed that the full width of each integer was sent. Because of this usage, changed await theRobot.testByteStream(25) to await theRobot.testByteStream(4) in the 32-bit case or await theRobot.testByteStream(8) in the 64-bit case.

Added the following code to main.py within if code==Commands.BYTESTREAM_TX.value:

```python
# dataUP = unpack("<III", data)  # for 3 32-bit numbers
dataUP = unpack("<QQQ", data)  # for 3 64-bit numbers
# print(f"Received {length} bytes of data")
print(dataUP)
```
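The format string has to match both the number and the width of the integers the Artemis packs. Here is a minimal sketch of that check. It assumes the data handed to the handler has already had the 2-byte header (command_type and length) stripped off, and parse_bytestream is a hypothetical helper name, not part of the base code.

```python
import struct


def parse_bytestream(data: bytes, width: int, num_ints: int = 3):
    """Unpack num_ints little-endian unsigned integers of 4 or 8 bytes each.

    width mirrors bytestream_active: 4 -> uint32_t ("I"), 8 -> uint64_t ("Q").
    """
    code = {4: "I", 8: "Q"}[width]  # KeyError for any other width
    fmt = "<" + code * num_ints     # "<III" or "<QQQ"
    expected = struct.calcsize(fmt)
    if len(data) != expected:
        raise ValueError(f"expected {expected} bytes for {fmt!r}, got {len(data)}")
    return struct.unpack(fmt, data)


if __name__ == "__main__":
    payload32 = struct.pack("<III", 0xFFFFFFFF, 7, 123456)
    payload64 = struct.pack("<QQQ", 0xFFFFFFFFFFFFFFFF, 7, 123456)
    # Adding the 2 header bytes gives the full packet length.
    print(len(payload32) + 2, parse_bytestream(payload32, 4))
    print(len(payload64) + 2, parse_bytestream(payload64, 8))
```

The totals (12 + 2 = 14 and 24 + 2 = 26 bytes) match the packet sizes reported in the results below.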

Results

Ping Test

The Bluetooth icon in the status bar does not appear until main.py is run. This indicates that the Python script, rather than the Ubuntu Bluetooth wizard, is establishing the connection.

The ping latency followed this histogram:

Using the setting "OutputRawData": True in settings.py showed me that returning a ping sends 96 bytes of data, all zeroes. Thus, the transfer rate followed this histogram:

Most of the “rate” in this case is the latency of returning pings. From the Artemis side, I measured the average time between pings as 160.0 ms. The average total RTT as measured by the Python script was 159.3 ms. The Artemis sends data very quickly.
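The transfer rate here is simply the ping payload divided by each round-trip time. Here is a minimal sketch, assuming a list of per-ping RTTs in milliseconds. The helper names are hypothetical.

```python
from statistics import mean

PING_PAYLOAD_BYTES = 96  # bytes per returned ping, seen with "OutputRawData": True


def transfer_rates(rtts_ms, payload_bytes=PING_PAYLOAD_BYTES):
    """Convert each round-trip time (ms) into a rate in bytes/second."""
    return [payload_bytes / (rtt / 1000.0) for rtt in rtts_ms]


def rate_at_mean_rtt(rtts_ms, payload_bytes=PING_PAYLOAD_BYTES):
    """Rate implied by the mean RTT (not the same as the mean of the rates)."""
    return payload_bytes / (mean(rtts_ms) / 1000.0)
```

With the measured mean RTT of 159.3 ms, rate_at_mean_rtt gives roughly 603 bytes/second. Averaging the per-ping rates gives a somewhat higher number than dividing by the mean RTT, because the rate is inversely proportional to the RTT.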

Request Float

The traceback at the bottom occurred because I stopped the program with Ctrl+C.

Note that although the value sent was 2.71828, the received value had additional digits, because 2.71828 cannot be represented exactly as a 32-bit float. This means that floats should not be compared like if( float1 == float2 ), but rather like if( abs(float1-float2) < tolerance ).
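The extra digits come from rounding 2.71828 to the nearest 32-bit float. The following minimal Python sketch reproduces this with the standard struct module. It packs the value as a little-endian 4-byte float, matching the Artemis's little-endian ARM core, and then compares using a tolerance instead of ==.

```python
import math
import struct

sent = 2.71828
# Round-trip through a 4-byte IEEE-754 float, like ((float *) res_cmd->data)[0]
received = struct.unpack("<f", struct.pack("<f", sent))[0]

print(received)                                    # close to, but not exactly, 2.71828
print(received == sent)                            # False: exact comparison fails
print(math.isclose(received, sent, abs_tol=1e-6))  # True: tolerance comparison works
```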

Bytestream Test

32-bit Stream

The Python script indicates that it receives 14 bytes of data in each packet. The mean transfer rate is 963 bytes/second. Note this is the average over the packets which were received; only 65% of the packets made it to the computer.

64-bit Stream

The Python script indicates that it receives 26 bytes of data in each packet. The mean transfer rate is 1.46 KB/second. Note this is the average over the packets which were received; only 22% of the packets made it to the computer.
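Each packet carries bytestreamCount, so the fraction that arrived can be estimated from gaps in the received counts. Here is a minimal sketch using a hypothetical helper name. It assumes the first and last packets of a run were received; otherwise the number sent is undercounted.

```python
def delivery_ratio(counts):
    """Fraction of packets received, given the bytestreamCount values that arrived."""
    received = set(counts)  # ignore any duplicates
    sent = max(received) - min(received) + 1
    return len(received) / sent


if __name__ == "__main__":
    print(delivery_ratio([1, 2, 4, 5, 8]))  # 5 of 8 packets arrived -> 0.625
```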

Lessons Learned

As I experienced, and as this SuperUser post points out, users must be part of the vboxusers group on the host machine, or else no USB devices are accessible from VirtualBox. This and other issues were solved when I did the following, in order:

- Add my user to the host machine’s vboxusers group.

- In the VirtualBox settings, ensure Enable USB Controller is checked.

- Connect the Bluetooth USB dongle.

- Start the VM.

- In the VirtualBox menu bar, mouse to Devices > USB and check Broadcom Corp 20702A0.

- Run main.py in the CLI.

Adjusting the Ubuntu Bluetooth settings was unnecessary and caused issues. The USB dongle is more reliable than my built-in BLE radio.

Another surprise, which I shouldn’t have run into, was that the code didn’t run when I executed python main.py. I noted that the file was executable and began with #!/usr/bin/env python3, and when I ran it directly as a script, it worked perfectly. It turned out that python was mapped to python2.7, not python3. Always check versions.

I found that the Python code “cares” what length is sent with the message: the length must match the format string, and the code stops parsing data after length bytes.

Lastly, the shorter the data packets were, the more likely they were to reach their destination.

See my code and data for Lab 2 here.

Lab 3

Materials Used

- Fancy RC Amphibious Stunt car with remote

- 2 NiCd rechargeable batteries with USB charger

- 2 AA batteries

- Screwdriver

- Ruler / measuring tape

- Timer (app)

- GPS (app)

- Laptop running Ubuntu 18.04 VM with ROS Melodic Morenia

Procedure

Physical Robot

Charged each NiCd battery about 8-9 hours before first use (or until the red light on the USB charger stopped flashing.) Inserted NiCd battery into robot and two rechargeable AA batteries into the remote.

Collected various measurements using the available environment, a ruler, and a timer. For clocking speed, used a GPS app to get distance measurements.

Simulation

Installed ros-melodic-rosmon.

Downloaded the lab 3 base code from Box into the folder shared between the host and the VM. Started the VM; extracted the archive; entered the folder extracted from the archive; and ran ./setup.sh.

Closed and reopened the terminal emulator, per the instructions.

Started lab3-manager, which was now aliased as shown here. Hit a to enter the Node Actions menu; then hit s to start a simulator.

Opened another terminal window and ran robot-keyboard-teleop to allow me to control the simulation.

Played the game and made the measurements below.

Results and Lessons Learned

Physical Robot

This video is courtesy of my apartment-mate Ben Hopkins.

The wheelbase is 4 in = 10.5 cm wide and 3.25 in = 8 cm long (measuring from the center of the wheels.) This measurement is useful to determine skid-steering quality.

Unless stated otherwise, the following tests were done on a flat surface.

Manual Control

How difficult it is to drive the robot manually helps me understand the difficulty of controlling it algorithmically.

- The three speed settings had significantly different effects. It was virtually impossible to flip when traveling slowly, but at max speed I could hardly start without flipping. The top speed and the default speed were nearly the same.

- The robot can turn in place quite predictably; however, it requires a lot of power to skid the wheels, and when the battery is low, it can no longer turn in place.

- It is hard to avoid driving in long arcs: I could hardly drive straight even with the joysticks maxed out. In code, driving straight will require a feedback loop.

Inertial Measurements

How quickly the robot accelerates and decelerates tells me a lot about the robot’s power-to-weight ratio. I don’t have a scale, but I can calculate its mass (at least in terms of its motor torque.)

- Acceleration: 6-8 feet to get to full speed. 6-8 feet to coast to a stop. 2-2.5 seconds to accelerate to full speed.

- It can stop quickly (within about 2 feet) by reversing with the slow button.

- These numbers stayed relatively constant as the battery drained; but the maximum speed decreased.

- Average max speed at full (ish) battery: 12 ft/s ≈ 3.7 m/s ≈ 8 mi/h

- These measurements were the same whether the robot drove forward or backward.
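Here is a quick Python check of the unit conversions, plus a rough average acceleration. It relies on the idealizing assumption of constant acceleration from rest, which the real robot certainly does not follow, and the helper names are hypothetical.

```python
FT_TO_M = 0.3048           # exact, by definition
FT_S_TO_MPH = 3600 / 5280  # 1 ft/s is about 0.68 mi/h


def ft_s_to_m_s(v_ft_s):
    return v_ft_s * FT_TO_M


def ft_s_to_mph(v_ft_s):
    return v_ft_s * FT_S_TO_MPH


def average_acceleration(v_max, t_to_max):
    """Mean acceleration (units of v_max per second) when reaching v_max in t_to_max."""
    return v_max / t_to_max


if __name__ == "__main__":
    v = 12.0  # ft/s, measured top speed
    print(f"{ft_s_to_m_s(v):.2f} m/s, {ft_s_to_mph(v):.1f} mi/h")
    for t in (2.0, 2.5):  # measured time to reach top speed
        a = average_acceleration(ft_s_to_m_s(v), t)
        print(f"{t} s to top speed -> about {a:.1f} m/s^2")
```

Under that assumption, the average acceleration comes out to about 1.5 to 1.8 m/s². Multiplying it by the robot's mass would give the average net driving force.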

Gravitational Measurements

OK, so I know the robot’s motors are strong enough to accelerate and decelerate it quickly. How strong is that? Where is the weight in the robot?

- The robot is quite stable – it has to tilt around 75° before it flips.

- The robot is also somewhat top-heavy; it flips if it accelerates or stops too suddenly.

- The motors were not very strong – it was sometimes difficult to climb hills. It could climb a 45° slope with a good battery, but it slowed significantly. Rough terrain also slowed it down since it had to climb over many short slopes.

Frictional Measurements

- The robot turned about its center predictably on a flat surface, but slopes made a big difference.

- The robot’s turn radius was predictable at a given battery level, speed, and type of terrain. Little rocks, initial speed, battery level, and slope all made a big difference.

- Average maximum rotational speed when turning in place: 75 rpm = 7.9 rad/s

- I noticed no differences between driving on flat (hard) carpet, concrete, and asphalt.

Other Measurements

- The range was quite good, 250+ feet line-of-sight.

- I could drive the robot around and under cars. The signal can go around at least 10 cars in a parking lot.

- On the first and second runs, the battery lasted 35 min (when the robot was moving about 1/2 the time.)

Simulation

- The robot doesn’t seem to have a minimum speed. I can reduce the input speed to 0.01 or less and it still moves (albeit around 1 square per minute.) It seems to have no maximum speed either.

- The same is true for angular speed.

- I could accelerate nearly instantly and reverse direction nearly instantly; the only limiting factor seems to be the frame rate. The time between 1x reverse and 1x forward is the same as between 3x reverse and 3x forward, or 3x forward and 3x reverse, etc.

- When I hit the wall, the robot did not bounce, flip, etc; the simulation paused and showed a warning triangle sign in the place of the robot until I backed away from the wall.

- I could grab and drag the robot using the mouse; as soon as I put the robot down, it resumed motion in the same direction as it was moving before. However, I couldn’t both drag/pan and steer at once; the keyboard control only works when the terminal window running robot-keyboard-teleop has focus.

Click here to see my data for Lab 3.

Lab 4

Materials Used

- SparkFun Artemis RedBoard Nano

- USB A-C cable

- SparkFun Serial Controlled Motor Driver (SCMD) module (with no jumpers soldered)

- SparkFun Qwiic (I²C) connector cable

- Fancy RC Amphibious Stunt Car

- 4.8V NiCd rechargeable battery

- 3.7V Li-ion rechargeable battery

Tools and Software

- Laptop with Ubuntu 18.04 VM and Arduino IDE

- Wire cutters (wire stripper would be ideal; scissors also work)

- Small Phillips-head screwdriver (optional)

- Small flat-head screwdriver (required for clamping wires in SCMD)

- Electrical tape

- Duct tape (optional)

Procedure

Physical Robot

Reviewed documentation. Removed the aesthetic cover and the motor cover; cut the wires to the motors right at the connectors; stripped about 3/8” of insulation; and connected them to the SCMD. Cut the battery wires close to the control board, stripped the ends, and connected them to the SCMD.

In Arduino IDE, installed the Serial Controlled Motor Driver library version 1.0.4 (Tools -> Manage Libraries -> search for “SCMD”.) Loaded Example1_Wire (from the newly installed SCMD library); changed the I²C device address to 0x5D; and ran it. It spun the two wheel motors. Used this command: Serial.printf("Address %d\n",i); within the for loop to tell me what the motor addresses were. Wrote code to spin the motors and found the minimum speed that made the wheels turn.

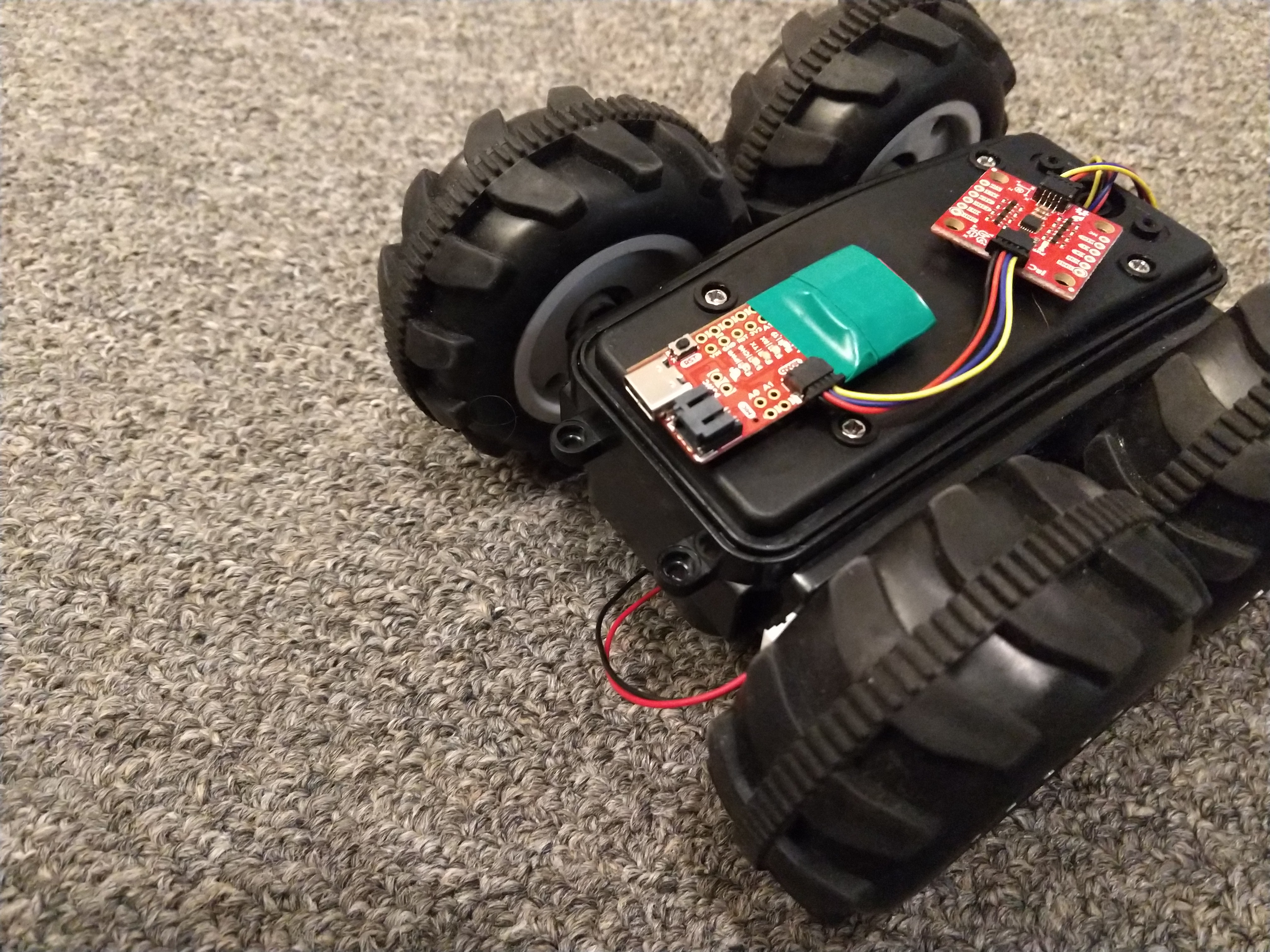

Removed the RC control board from the robot; pushed the SCMD into its place; and reattached the top cover. The Qwiic wire came out between the cover and the case, like this:

Wrote code to make the robot drive in a straight line (using a linear scaling calibration) and took a video.

Simulation

Downloaded the Lab 4 Base Code from Box into the folder shared between the host and the VM. In the VM, opened a terminal window; entered the shared folder; extracted the base code; and ran setup.sh in the appropriate folder.

Closed and reopened terminal window. Ran lab4-manager and followed the instructions to open a simulator window.

Opened another terminal window (Ctrl+Alt+T). Entered the directory /home/artemis/catkin_ws/src/lab4/scripts and ran jupyter lab.

In the resulting Firefox window, opened the Jupyter notebook lab4.ipynb. Followed the instructions and saved the lab notebook.

Results and Notes

Physical Robot

Running the example code, when the motor driver was plugged in, gave the result

```
I2C device found at address 0x5D !
```

Not surprisingly, the addresses of the wheel motors were 0 and 1 (since the SCMD drives up to two motors.)

I could not fit the Qwiic connector through the start button hole.

The minimum power at which I could make the wheels spin was not the same on both sides. On the left (address 0) it was about 46; on the right (address 1) it was about 50. These numbers seem to change as the battery level changes.

I reasoned that the friction in the wheels is a constant force, not a velocity-dependent one, so the frictional resistance should be a constant offset. This seemed to work: at low power, I needed +4 power on the right side, and the same should be true at higher power levels. However, the wheels were not exactly the same size, as evidenced by the fact that the robot drove in a curve. By trial and error, I scaled the left side up by 8% to compensate for this, and then the robot drove straighter.
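The offset-plus-scale idea can be written as a single mapping from the requested power to the left motor's level. Here is a minimal sketch. The function name and the default calib and offset values are hypothetical placeholders loosely based on the numbers above, since the final values are not given. The 0 to 255 clamp assumes that is the range of drive levels the SCMD library's setDrive() accepts.

```python
def left_motor_level(power, calib=1.08, offset=-4, max_level=255):
    """Map a requested power to the left motor's drive level.

    calib compensates for unequal wheel sizes (a proportional error);
    offset compensates for constant friction (a fixed error).
    """
    if power == 0:
        return 0  # a stop request should stay a stop, regardless of offset
    level = round(calib * power + offset)
    return max(0, min(max_level, level))  # clamp to the assumed valid range


if __name__ == "__main__":
    for p in (0, 50, 100, 250):
        print(p, "->", left_motor_level(p))
```

Handling the stop case separately matters: otherwise a nonzero offset would leave one wheel creeping when both are meant to be at zero.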

When I assembled the robot, I found that the electrical tape wasn’t sticky enough to hold the Artemis Nano onto the robot case; and zip ties would prevent me from replacing the battery. So I used duct tape instead.

Below is a video of the robot driving in a straight line (triggered by a whistle).

Also note that I could not set the detector to recognize higher-frequency whistles; otherwise, the robot would pick up the sound of its own motors and keep on driving.

The next two videos show two of my attempts to make it drive in a triangle, using the following code (with different wait times):

```cpp
for (int i = 0; i < 3; i++) {
    myMotorDriver.setDrive(right, 1, power);                 // 1 for forward
    myMotorDriver.setDrive(left, 0, calib * power + offset); // left side is reversed
    unsigned long t = millis();
    while (millis() - t < 350) { // Wait for a specified time, but keep updating the PDM data
        myPDM.getData(pdmDataBuffer, pdmDataBufferSize);
    }

    // Coast to a stop
    myMotorDriver.setDrive(left, 1, 0); // Set both sides to zero
    myMotorDriver.setDrive(right, 1, 0);
    t = millis();
    while (millis() - t < 500) { // Wait for a specified time, but keep updating the PDM data
        myPDM.getData(pdmDataBuffer, pdmDataBufferSize);
    }

    // Spin in place
    myMotorDriver.setDrive(right, 1, power);                 // 1 for forward
    myMotorDriver.setDrive(left, 1, calib * power + offset); // left side is NOT reversed since we're spinning!
    t = millis();
    while (millis() - t < 290) { // Wait for a specified time, but keep updating the PDM data
        myPDM.getData(pdmDataBuffer, pdmDataBufferSize);
    }

    // Coast to a stop
    myMotorDriver.setDrive(left, 1, 0); // Set both sides to zero
    myMotorDriver.setDrive(right, 1, 0);
    t = millis();
    while (millis() - t < 500) { // Wait for a specified time, but keep updating the PDM data
        myPDM.getData(pdmDataBuffer, pdmDataBufferSize);
    }
}
```

The open-loop control is very sensitive to

- Timing (the difference between the first and the second videos is 20 ms)

- Surfaces (hardly turns on carpet; spins easily on tile)

- Battery level (in less than a minute, the robot would no longer turn enough)
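To see how tight the timing is, here is an idealized Python estimate of the spin time for one 120° corner of a triangle. It uses the in-place rotation rate measured in Lab 3 (about 75 rpm at full power), which may differ from the rate at the power used here. It also assumes the robot spins at a constant rate with no spin-up or coasting, which the real robot certainly does not do, and the helper names are hypothetical.

```python
import math


def rpm_to_rad_s(rpm):
    return rpm * 2 * math.pi / 60


def spin_time_s(angle_deg, rate_rpm):
    """Time to turn angle_deg at a constant rate_rpm."""
    return math.radians(angle_deg) / rpm_to_rad_s(rate_rpm)


def heading_error_deg(timing_error_s, rate_rpm):
    """Heading error caused by stopping timing_error_s too early or too late."""
    return math.degrees(rpm_to_rad_s(rate_rpm) * timing_error_s)


if __name__ == "__main__":
    print(f"{rpm_to_rad_s(75):.2f} rad/s")
    print(f"120 deg corner: {spin_time_s(120, 75) * 1000:.0f} ms")
    print(f"20 ms of timing error: {heading_error_deg(0.020, 75):.1f} deg")
```

Under this model, the 20 ms difference between the two videos corresponds to roughly 9° of heading per corner. That helps explain why such small timing changes matter so much.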

Simulation

```
artemis@artemis-VirtualBox:~$ cd Shared
artemis@artemis-VirtualBox:~/Shared$ ls
...
lab4_base_code.zip
...
artemis@artemis-VirtualBox:~/Shared$ unzip lab4_base_code.zip
Archive: lab4_base_code.zip
creating: lab4_base_code/
inflating: lab4_base_code/bash_aliases
inflating: lab4_base_code/setup.sh
inflating: lab4_base_code/lab4.zip
artemis@artemis-VirtualBox:~/Shared$ ls
...
lab4_base_code
lab4_base_code.zip
...
artemis@artemis-VirtualBox:~/Shared$ cd ./lab4_base_code/
artemis@artemis-VirtualBox:~/Shared/lab4_base_code$ ls
bash_aliases lab4.zip setup.sh
artemis@artemis-VirtualBox:~/Shared/lab4_base_code$ ./setup.sh
> Log output written to: /home/artemis/Shared/lab4_base_code/output_lab4.log
> Lab Work Directory: /home/artemis/catkin_ws/src/lab4/scripts/
Validating...
Step 1/3: Extracting Files to: /home/artemis/catkin_ws/src/
Step 2/3: Setting up commands
Step 3/3: Compiling Project
Successfully compiled lab.
NOTE: Make sure you close all terminals after this message.
artemis@artemis-VirtualBox:~/Shared/lab4_base_code$ exit
```

My working open-loop square was as follows:

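```python
import time  # the notebook may already import this; `robot` is provided by the notebook

# Your code goes here
for i in range(4):
    robot.set_vel(1, 0)  # drive straight
    time.sleep(0.5)
    robot.set_vel(0, 1)  # turn in place
    time.sleep(1.625)
robot.set_vel(0, 0)  # stop after the fourth side
```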

It was easy to tune the timing so that the robot predictably drove in a perfect square, like this:

See the rest of my code, and the Jupyter notebook, here. Also see additional things I learned and notes in the Other Lessons Learned page.

Lab 5

Background

Materials

Components

- SparkFun Artemis RedBoard Nano

- USB A-C cable

- SparkFun 4m time-of-flight sensor (VL53L1X)

- SparkFun 20cm proximity sensor (VCNL4040)

- SparkFun Serial Controlled Motor Driver (SCMD) module (with no jumpers soldered)

- SparkFun Qwiic (I²C) connector cable

- Fancy RC Amphibious Stunt Car

- 4.8V NiCd rechargeable battery

- 3.7V Li-ion rechargeable battery

- Boxes and targets of various sizes

Tools and Software

- Laptop with Ubuntu 18.04 VM and Arduino IDE

- Wire cutters (scissors also work)

- Small Phillips-head screwdriver (may substitute flathead)

- Ruler (or printed graph paper)

- Gray target (printed; 17% dark)

- Electrical tape

- Double-sided tape or sticky pad

Prelab

The two sensors are quite different. The long-range ToF sensor (VL53L1X) has a field of view of about 15 degrees and a much longer range. It can safely be mounted near the ground without seeing the ground, but it could miss obstacles right in front of the wheels, since its detection cone is very narrow near the robot. The proximity sensor (VCNL4040) has a wider field of view (±20° = 40°), so it may be placed right at the center of the robot, where it will hopefully pick up obstacles close by and to the side that fall outside the VL53L1X's cone. Also note that the VCNL4040's maximum range (about 20 cm) lies within the range of the VL53L1X, so there is some overlap where the two sensors’ readings may be compared.
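The geometry shows why the narrow cone matters near the robot. A sensor with full field of view θ covers a width of 2d·tan(θ/2) at distance d. Here is a minimal sketch using the field-of-view figures above (15° for the VL53L1X, 40° for the VCNL4040), treating both cones as ideal.

```python
import math


def cone_width(distance, fov_deg):
    """Width covered at `distance` by an ideal cone with full angle fov_deg."""
    return 2 * distance * math.tan(math.radians(fov_deg) / 2)


if __name__ == "__main__":
    for d_cm in (5, 10, 20):
        print(f"{d_cm:>2} cm: ToF {cone_width(d_cm, 15):4.1f} cm, "
              f"prox {cone_width(d_cm, 40):4.1f} cm")
```

At 10 cm, the ToF cone is only about 2.6 cm wide, much narrower than the roughly 10 cm between the wheels, while the proximity sensor's cone is about 7.3 cm wide.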

These two distance sensors exemplify the main types of infrared ranging sensors.

- Infrared intensity measurement: This uses the inverse-square law to determine the distance to an object. However, the coefficient of the inverse-square law varies widely with color, and this intensity is also extremely sensitive to ambient light. The VCNL4040 uses intensity measurement.

- Infrared time-of-flight (ToF): This works like RADAR, by measuring the elapsed time for the optical signal to bounce back. It is more immune to noise than intensity measurement, but it is still vulnerable to interference from ambient light.

- Infrared angle measurement: This uses the angle (sometimes in conjunction with ToF) of the returned light to triangulate the distance. It eliminates some more of the problems of ToF, but is again sensitive to ambient light and refraction / angle of the reflector. The VL53L1X uses both angle and ToF measurement.
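The first two principles reduce to simple formulas. In this minimal sketch (the helper names are hypothetical), intensity ranging follows the inverse-square model described above, I = k/d², so a single reference reading at a known distance fixes k for a given target (and, as noted, k changes with the target's color). Time-of-flight ranging uses d = c·t/2, because the light travels out and back.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458


def distance_from_intensity(intensity, ref_intensity, ref_distance):
    """Inverse-square model: I * d^2 is constant for a given target."""
    return ref_distance * math.sqrt(ref_intensity / intensity)


def distance_from_tof(round_trip_s):
    """Half the round-trip path length of the light pulse, in meters."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2


if __name__ == "__main__":
    # Four times the reference intensity -> half the reference distance.
    print(distance_from_intensity(4000, ref_intensity=1000, ref_distance=10))  # 5.0
    print(f"{distance_from_tof(1e-9) * 100:.1f} cm per ns of round trip")
```

The second line shows why ToF sensors need very fast timing: one nanosecond of round trip corresponds to only about 15 cm.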

Other available ranging sensors include SONAR (used in submarines and bats ☺) and RADAR (used in aircraft.) Since, for a given aperture size, the spread of a diffracted beam increases with increasing wavelength, both of these require some sort of beam-steering to get a sufficiently narrow beam to see only what’s in front of the robot. IR ranging is thus simpler and usually cheaper to use.
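Here are some rough numbers for the diffraction argument. For a circular aperture of diameter D, the first dark ring of the diffracted beam sits at sin θ ≈ 1.22 λ/D. In this minimal sketch, the 40 kHz sonar frequency, 16 mm transducer, 940 nm IR wavelength, and 1 mm emitter aperture are illustrative assumptions, not specifications of any sensor used here.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # in air, roughly room temperature


def half_angle_deg(wavelength_m, aperture_m):
    """Angle to the first null of a circular aperture's diffraction pattern."""
    s = 1.22 * wavelength_m / aperture_m
    return 90.0 if s >= 1 else math.degrees(math.asin(s))


if __name__ == "__main__":
    sonar_wavelength = SPEED_OF_SOUND_M_S / 40_000  # about 8.6 mm at 40 kHz
    print(f"sonar: {half_angle_deg(sonar_wavelength, 0.016):.1f} deg")
    print(f"IR:    {half_angle_deg(940e-9, 0.001):.3f} deg")
```

Under these assumptions, the sonar beam spreads by tens of degrees, while diffraction spreads the IR beam by well under a degree. The IR sensors' actual fields of view are set by their optics, not by diffraction.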

Procedure

Physical Robot

Skimmed the documentation for the VCNL4040 and the SparkFun repo and hookup guide. Noted that the default I²C address of the sensor is 0x60.

In the Arduino IDE, installed the SparkFun VCNL4040 Arduino Library using Tools > Manage Libraries.

Reviewed the datasheet and the manual for the VL53L1X.

Ran Example1_Wire (File > Examples > Wire) to find all I²C addresses. Ran Example4_AllReadings (File > Examples > SparkFun VCNL4040 Proximity Sensor Library) to measure data. Tested on five different targets under various conditions. Recorded data once the reading stabilized (after about 5s.) When the data fluctuated wildly (e.g. at very close ranges) took the median and rounded.

In the Arduino IDE, installed the SparkFun VL53L1X Arduino Library using Tools > Manage Libraries. Ran Example1_Wire again to find the I²C address of the time-of-flight sensor. Tested ranging once and saw that it needed calibration. Calibrated using Example7_Calibration (File > Examples > SparkFun VL53L1X Distance Sensor). Adjusted offset accordingly in Example1_ReadDistance and tested ranging on several targets with the lights on and with the lights off.

Wrote and tested an obstacle-avoidance program.

Simulation

Downloaded and extracted the lab 5 base code. Entered the directory and ran setup.sh. Closed the terminal window.

Entered the directory ~/catkin_ws/src/lab5/scripts/. Ran jupyter lab; opened lab5.ipynb; followed the instructions in the Jupyter Notebook.

Results and Notes

Physical Robot

```
Unknown error at address 0x5D
Unknown error at address 0x5E
Unknown error at address 0x5F
I2C device found at address 0x60 !
Unknown error at address 0x61
Unknown error at address 0x62
Unknown error at address 0x63
```

The VCNL4040 indeed had the default I²C address of 0x60.

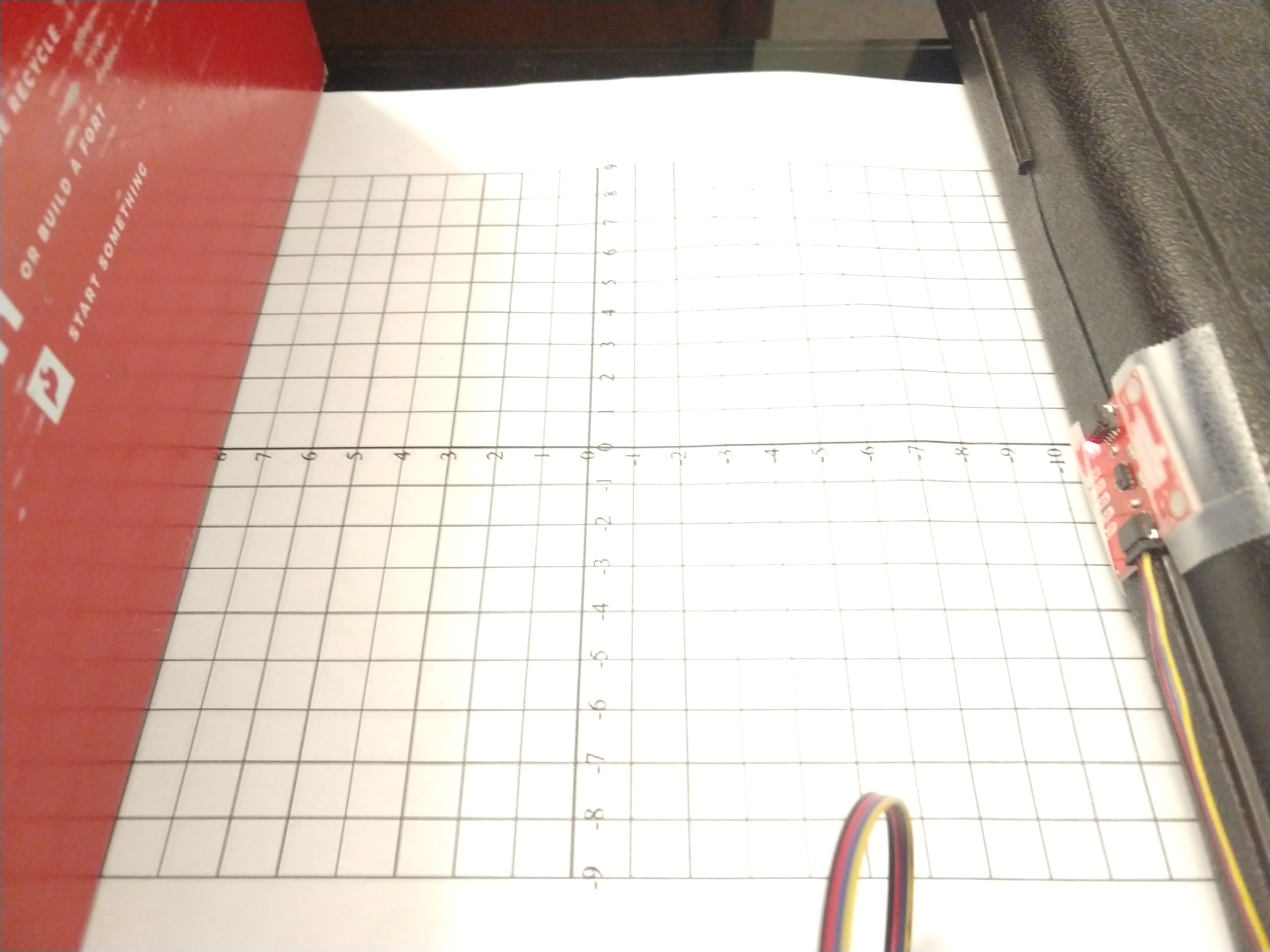

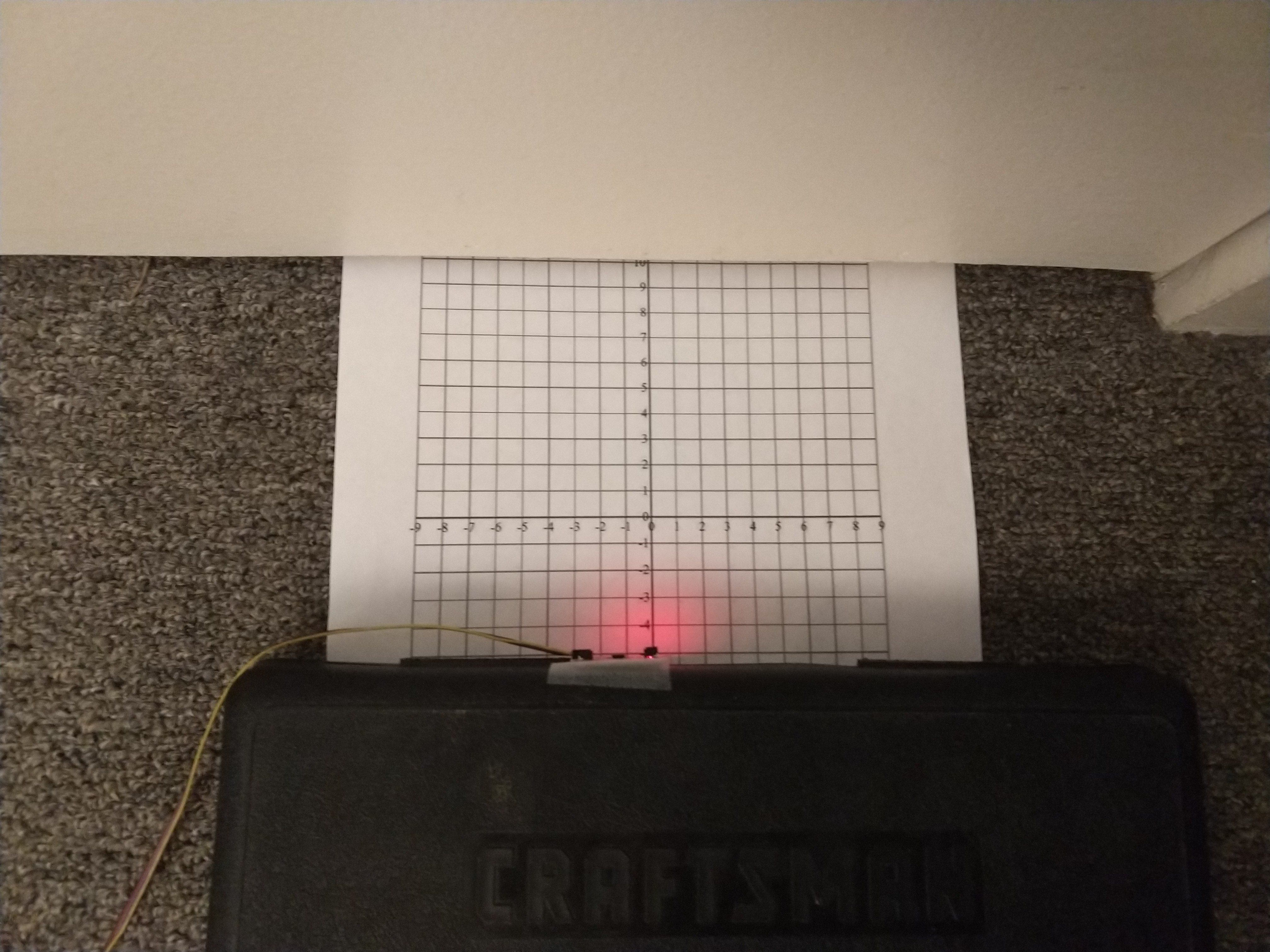

When testing the ranging for both sensors, I found it useful to tape the sensor to a fixed object, as suggested in the instructions. For me, it was a screwdriver case.

Figure 1. Ranging test with VCNL4040.


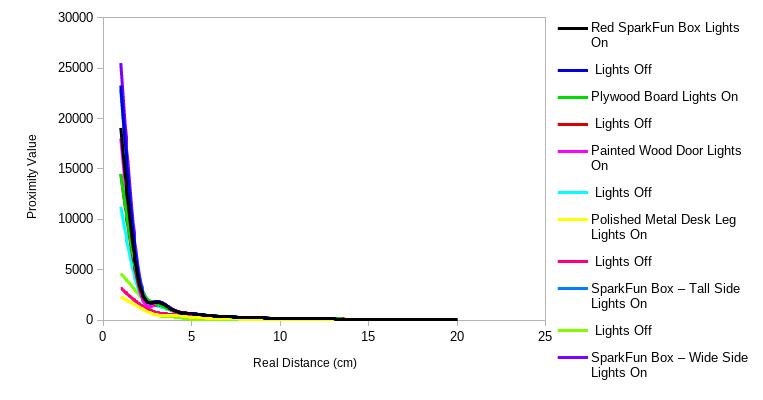

When running Example4_AllReadings, I noticed that the sensor readings took 3-5 seconds to stabilize. It seems like there is a rolling-average filter or an integrator in the sensor. I was also a bit surprised that the “prox reading” wasn’t a range estimate (as it is on ultrasonic rangefinders), but an intensity which scales with the inverse-square law. After gathering data from several surfaces, I found that my data looked much like the screenshot shown in the lab instructions.

I was pleasantly surprised that the VCNL4040 proximity ranges were about the same whether I had the lights on or off, and even if I shone a flashlight on the sensor. Both the shadow of the target and the red LED on the board significantly affected brightness readings as the sensor approached the target, so I recorded brightness at 20cm away where these had less effect.

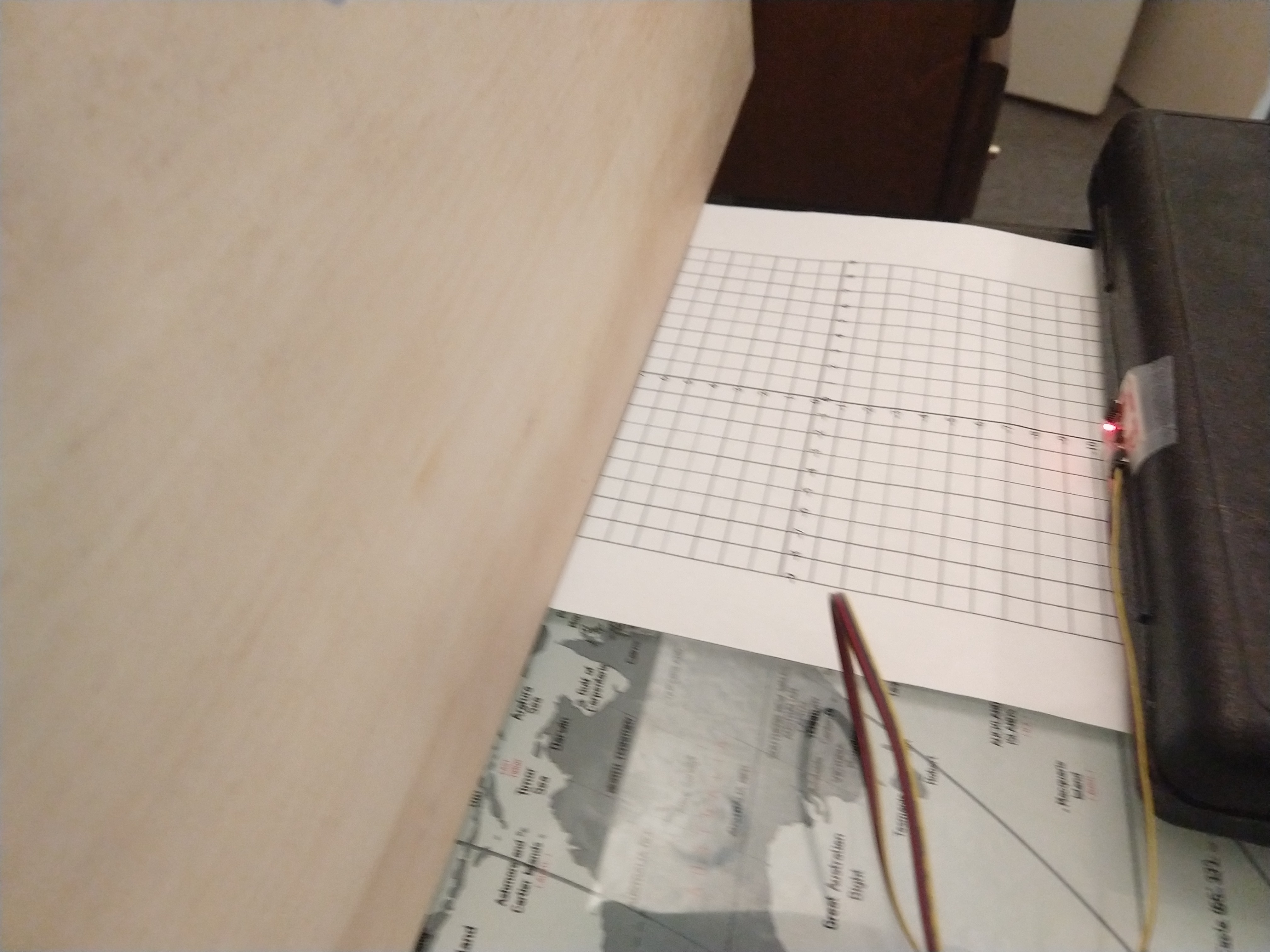

Figure 2. Ranging test with desk leg and VCNL4040.


With the desk leg, I noticed that the readings were much worse than they were for other objects. At first I assumed it was the reflectivity of the surface, but I noted three other things:

- The graph paper was rumpled. Was the sensor seeing the paper instead? Turning the sensor sideways (so it completely missed the leg) gave approximately the same readings. Maybe it was seeing the paper.

- The reading varied significantly when the sensor moved side to side. Was it the width?

- The desk leg wasn’t parallel to the sensor, and I noticed that angles mattered in the case of the plywood too. But, holding the sensor off the ground and pointing it directly at the angled leg didn’t make much difference.

I repeated a few measurements of the desk leg with a ruler instead of the graph paper, and achieved the same results.

I also measured the box on its side, which ruled out the possibility that the difference came from the object’s width rather than its surface texture.

Figure 3. Prox data vs. real range.


As the SparkFun hookup guide predicted, the I²C address of the ToF sensor was 0x29.

```
Unknown error at address 0x26
Unknown error at address 0x27
Unknown error at address 0x28
I2C device found at address 0x29 !
Unknown error at address 0x2A
Unknown error at address 0x2B
Unknown error at address 0x2C
```

My first reading at 20cm distance showed 140cm instead, so I tried to run Example7_Calibration. However, this code didn’t work. I traced the problem to the line while (!distanceSensor.checkForDataReady()), as the loop kept running forever and the sensor was never ready. Comparing this example code to Example1_ReadDistance (which worked), I noticed that distanceSensor.startRanging() had never been called. Adding this line to the example gave me successful (and repeatable) calibration.

```
*****************************************************************************************************
Offset calibration
Place a light grey (17 % gray) target at a distance of 140mm in front of the VL53L1X sensor.
The calibration will start 5 seconds after a distance below 10 cm was detected for 1 second.
Use the resulting offset distance as parameter for the setOffset() function called after begin().
*****************************************************************************************************
Sensor online!
Distance below 10cm detected for more than a second, start offset calibration in 5 seconds
Result of offset calibration. RealDistance - MeasuredDistance=37 mm
```

Then, I slightly modified the example distance-reading code as follows:

In void setup(), added a line distanceSensor.setOffset(37);

In void loop(), added code to time each range measurement and to print the time to the serial output.

When I tried different timing budgets, I didn’t see much improvement in precision beyond 60 ms in short-range mode or 180 ms in long-range mode. These timing budgets seemed to be the best compromise between accuracy and speed: increasing them further doesn’t gain much accuracy, and reducing them further doesn’t gain much speed.
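One way to make this "diminishing returns" judgment repeatable is to log several readings of a fixed target at each timing budget, then pick the smallest budget whose standard deviation is close to the best one. In this minimal sketch, the helper name and the 10% tolerance are hypothetical choices, and the readings in the example are made up for illustration.

```python
from statistics import pstdev


def pick_timing_budget(readings_by_budget, tolerance=0.10):
    """Smallest budget (ms) whose spread is within `tolerance` of the best spread.

    readings_by_budget maps a timing budget in ms to a list of range readings
    of the same fixed target.
    """
    spreads = {b: pstdev(r) for b, r in readings_by_budget.items()}
    best = min(spreads.values())
    return min(b for b, s in spreads.items() if s <= best * (1 + tolerance))


if __name__ == "__main__":
    example = {  # illustrative numbers only, not measured data
        20: [190, 210, 195, 205, 200],
        60: [199, 201, 200, 200, 200],
        140: [200, 200, 201, 199, 200],
    }
    print(pick_timing_budget(example))
```

In this made-up example, the 60 ms and 140 ms budgets are equally precise, so the function picks the faster 60 ms budget.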

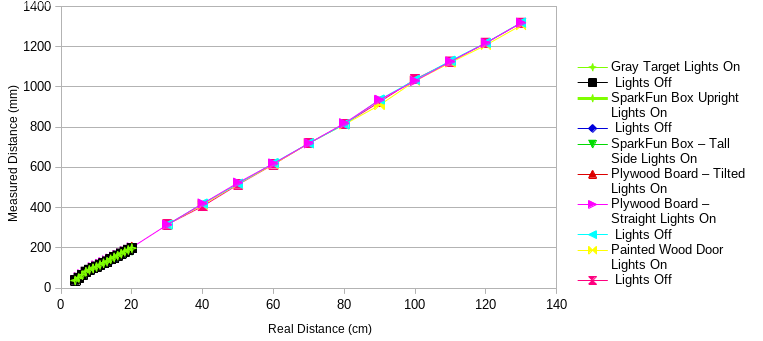

I noticed that, as the datasheet said, the ranging in ambient light was significantly more precise when I used short mode. The same seemed to be true in the dark, however. My data, plotted below, shows these trends. Note that some “waves” in the graphs are repeated for all surfaces.

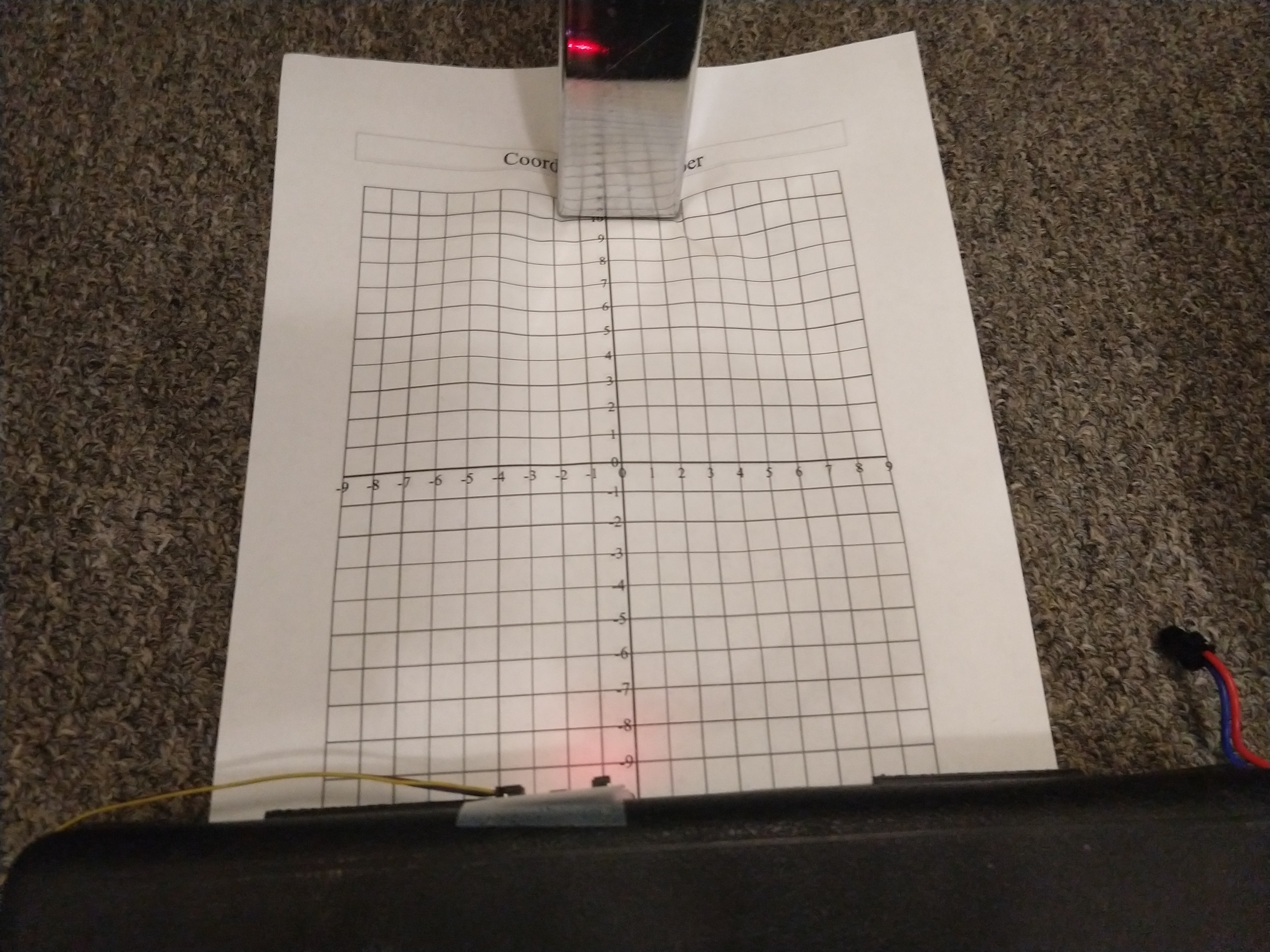

Figure 3. Ranging setup with plywood.


The ToF sensor was much less sensitive to the width of the object being detected; it made no difference what the orientation of the box was. It was somewhat sensitive to the angle of the plywood board, but only at short distances (where the VCNL4040 might be a better choice.)

Figure 4. Ranging setup with door (using graph paper for 20cm or less). Note: used measuring tape and taller box for long-range testing.


Figure 5. Measured range (short range mode) vs. real range.


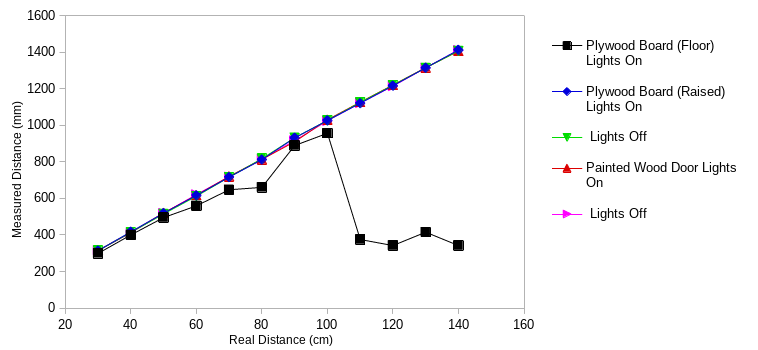

In the long-range case, my (mini) tape measure was only 1 m long, so I added an 18” ruler at the end of the tape measure to reach 1.4 m. The ToF sensor was surprisingly immune to small changes in angle (probably because the beam spans about 15°); but, when mounted low, it kept picking up the rough carpet, leading to noisy and very inaccurate measurements. To mount the sensor higher, I taped it to the box of the RC car. That is why there is a series which dips back down, and another series labeled “raised”.

The VL53L1X ToF sensor didn’t seem to see the metal desk leg at all. Hence, I did not gather range data for that. This will obviously cause bloopers when the robot cannot see shiny metal objects.

Figure 6. Measured range (long range mode) vs. real range.

Unfortunately, the SparkFun library doesn’t have a function to output the signal or the sigma values. It can only set the thresholds. By default, these are:

- Signal intensity threshold: 0.0

- Standard deviation threshold: 0.0

This seems to mean that there is no threshold. (I tried showing more decimal places and they were also zeros.)

I also didn’t notice much change in accuracy when I increased or decreased the time between measurements (at least not during manual testing). By default, there are 100 ms between measurements.

When I moved the ToF sensor back and forth suddenly, it still gave consistent readings as fast as I could move it. Without knowing what typical standard deviations and signal intensities were, I didn’t have data to improve the values. So, I left these values at their defaults.

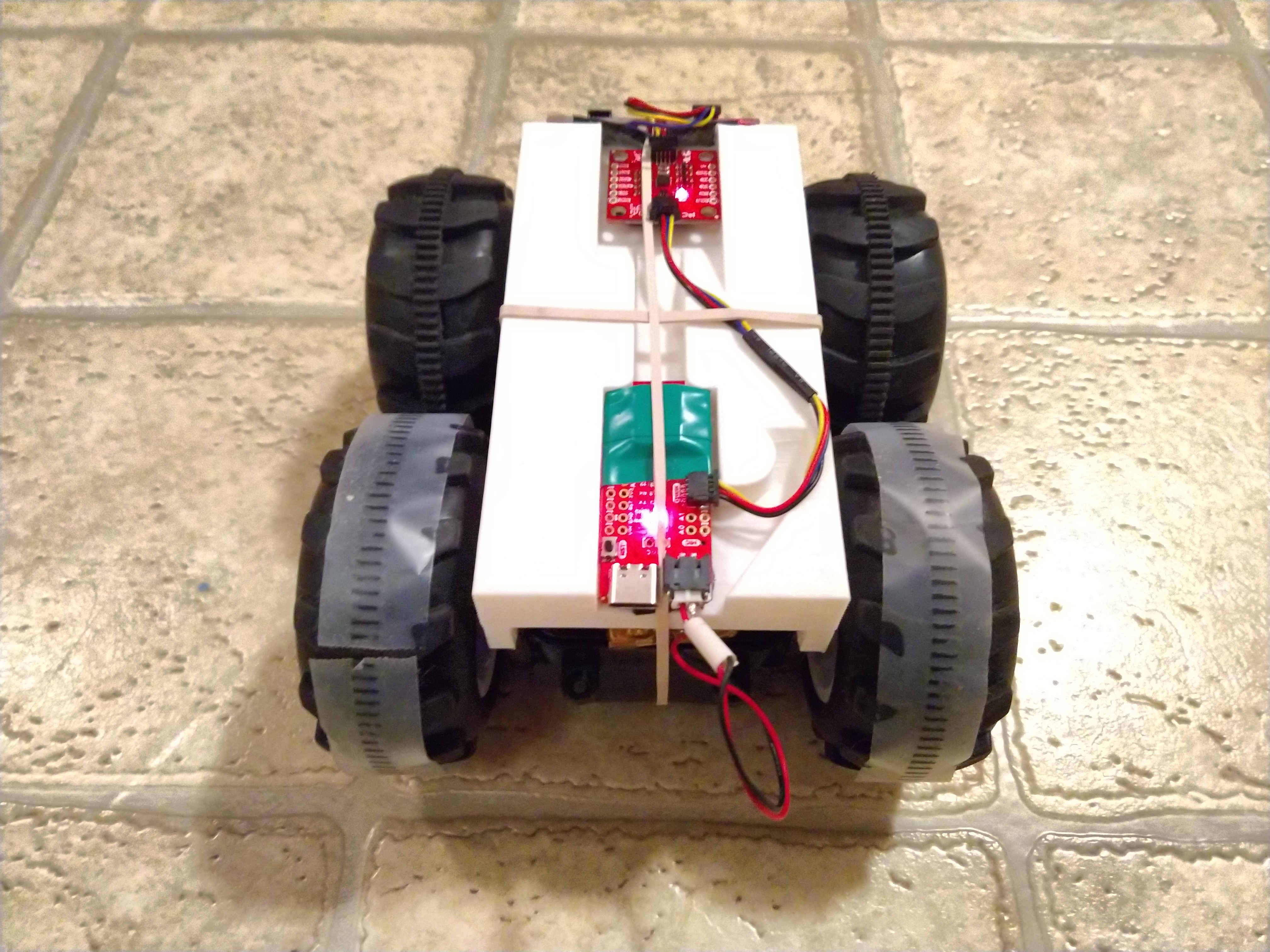

Update on Robot Construction

The wires now fit through the hole; I used the Qwiic-to-serial connectors and fed the pins through one at a time.

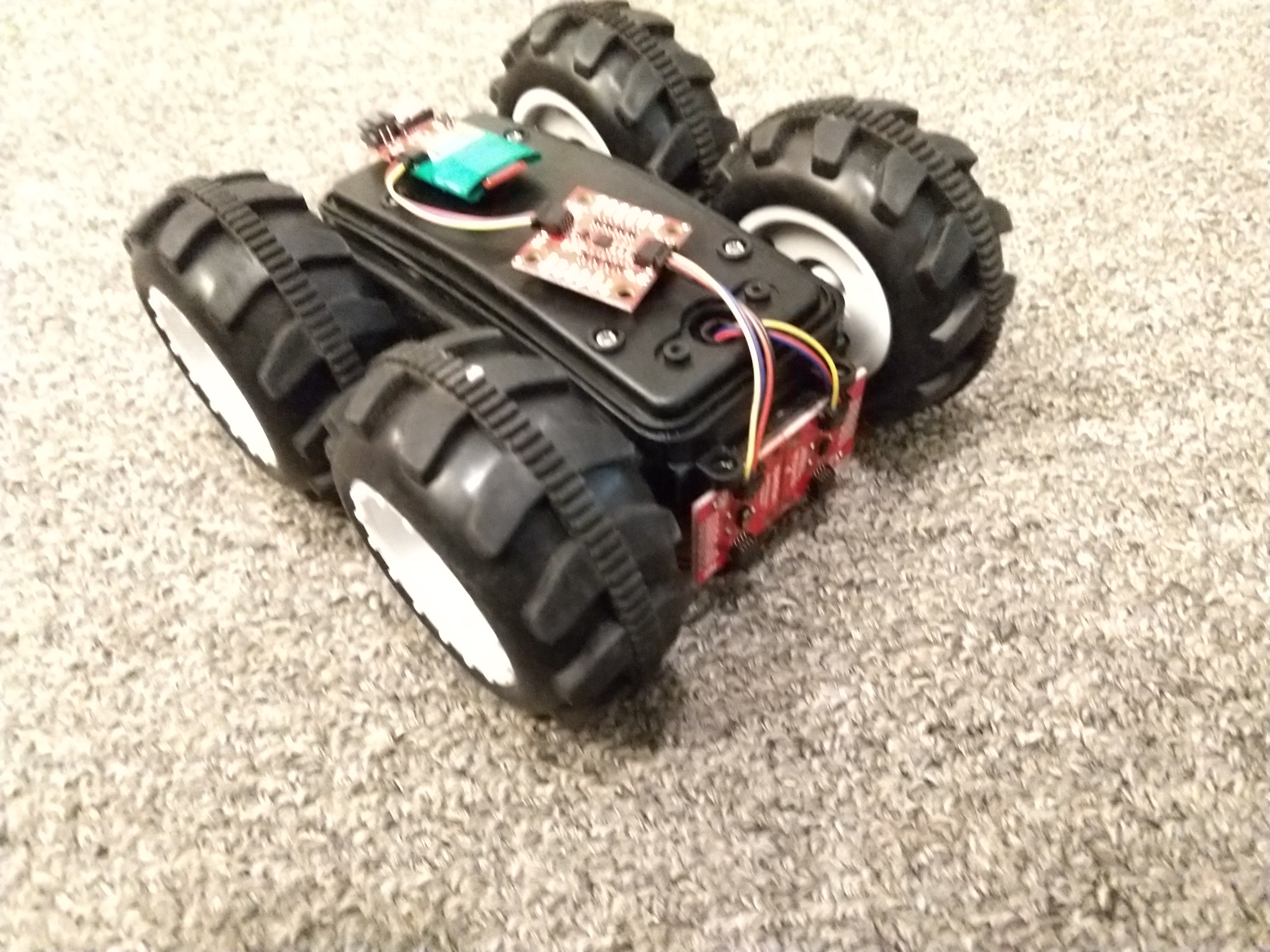

Both sensors are taped securely on the front using the double-sided tape.

The IMU and the Artemis board are also taped to the top. Unfortunately, the tape isn’t that sticky and the top is slippery, so they keep coming off. I intend to use duct tape again after the next lab.

The LiPo battery is taped to the bottom of the robot. This eliminates mess on the top and makes it easier to unplug or replace the battery without removing the Artemis. It also allows the Artemis USB-C port to face off the edge of the robot, so I can easily plug and unplug it to upload code.

Figure 7. Front of robot, showing rangefinders (front), IMU (top, front), and RedBoard Artemis (top, back).

Figure 8. Rear of robot, showing RedBoard Artemis (top, front), IMU (top, back), and LiPo battery (underneath, unplugged).

Obstacle Avoidance

Unfortunately, I spent a long time taking data and didn’t get obstacle avoidance working in time. In the first attempt below, I made the mistake of using binary on/off speed control with too large a minimum range.

I could drive faster and still stop in time once I made the robot scale its speed with its distance from the obstacle.
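A minimal sketch of distance-proportional speed control, written in Python to match the simulation code. The stop distance, full-speed distance, and 0-255 speed range are illustrative assumptions, not the values used on the robot.

```python
def speed_from_distance(distance_mm, stop_mm=200.0, full_speed_mm=1000.0, max_speed=255):
    """Map a ToF range reading to a drive speed (hypothetical helper).

    Stops inside stop_mm, drives at max_speed beyond full_speed_mm,
    and scales linearly in between so the robot decelerates as it
    approaches an obstacle. All thresholds are assumed values.
    """
    if distance_mm <= stop_mm:
        return 0
    if distance_mm >= full_speed_mm:
        return max_speed
    fraction = (distance_mm - stop_mm) / (full_speed_mm - stop_mm)
    return int(fraction * max_speed)


# Speeds taper off as the obstacle gets closer.
for d in (1500, 800, 400, 150):
    print(d, speed_from_distance(d))
```

Compared with binary on/off control, this schedule starts braking well before the stop distance, which is what lets the top speed go up.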

Backing up while turning gave even better results. Note the deceleration as it approaches an obstacle.

The robot can drive pretty fast without hitting the wall. Below is a video of it driving down a 0.4 m tape strip. Note the deceleration.

This one is faster, after I got the robot aimed in the right direction:

See all my range measurements, pictures, videos, and code here on GitHub.

Physical Robot - Data

Ex post facto, I realized that I had typed square brackets instead of parentheses when inserting my graphs, so they never displayed. See above for the data, which was there all along. Adding this to Lessons Learned.

Simulation

This was a simple exercise after previous labs. I found that it was much easier to dodge walls when the robot traveled in arcs, so I set it to always drive in long arcs since it’s impossible to be perfectly parallel to a wall.

```python
import math
import time

def perform_obstacle_avoidance(robot):
    while True:
        if robot.get_laser_data() < 0.5:
            robot.set_vel(0.0, 0.5)  # turn in place
            initialAngle = robot.get_pose()[2]
            angleTurned = 0
            while angleTurned < 0.5:  # turn about 0.5 rad (~30 degrees), then read again
                delta = robot.get_pose()[2] - initialAngle
                # wrap to [-pi, pi] so the loop still exits if the heading crosses +/-pi
                angleTurned = math.atan2(math.sin(delta), math.cos(delta))
            robot.set_vel(0, 0)
        else:
            # drive in a slight curve since we will never be exactly parallel to a wall
            robot.set_vel(0.5, 0.05)
        time.sleep(0.1)

perform_obstacle_avoidance(robot)
```

The turning function uses a while loop to ensure it turns at least 0.5 rad (about 30°). Since this simulated robot starts and stops basically instantly, it turns almost exactly that much.

The reasoning for this is to allow the robot to “follow” walls to which it is neither perpendicular nor parallel. (Good practice for my room with lots of angles.) However, in this perfect simulated environment, all the surfaces are perpendicular to each other. A simplified version and its performance are shown below.

See all my code here on GitHub. Also see additional things I learned and notes in the Other Lessons Learned page.

Lab 6

Materials Used

- Robot from lab 5, containing

  - Driving base of RC car

  - 4.8 V NiCd battery

  - SparkFun Serially Controlled Motor Driver (SCMD)

  - SparkFun VCNL4040 IR proximity sensor breakout board

  - SparkFun VL53L1X IR rangefinder breakout board

  - SparkFun ICM-20948 9 DoF IMU breakout board

  - SparkFun RedBoard Artemis

  - 3.7 V LiPo battery

  - 4 short SparkFun Qwiic (I²C) connector cables (or long cables and zip ties)

  - Electrical tape, duct tape, and sticky pads (3M)

- Computer with the following software:

  - Course Ubuntu 18.04 VM (installed in Lab 1)

  - Arduino IDE with Artemis RedBoard Nano board installed

  - At least the following Arduino libraries:

    - Core libraries (Arduino, etc.)

    - Serial Controlled Motor Driver (SparkFun)

    - SparkFun 9DoF IMU Breakout - ICM 20948 - Arduino Library

    - SparkFun VCNL4040 Proximity Sensor Library

    - SparkFun VL53L1X 4m Laser Distance Sensor

  - Optional: SerialPlot - also on sourcehut

- USB A-C cable (for a computer with USB A ports)

Procedure

Physical Robot (part a)

Reviewed the SparkFun product info and documentation for the ICM-20948 IMU. Noted (among other things) that the default I²C address is 0x69.

With the SparkFun 9DoF IMU Breakout - ICM 20948 - Arduino Library installed and the IMU connected to the Artemis via a Qwiic cable, ran the Example1_Wire Arduino sketch (Arduino IDE: File > Examples > Wire, under “Examples for Artemis RedBoard Nano”). Confirmed the default I²C address.

Ran the Example1_Basics Arduino sketch (accessed from the IDE by File > Examples > SparkFun 9DoF IMU Breakout - ICM 20948 - Arduino Library). Confirmed that the IMU read out sensible values on all nine axes.

Wrote code to calculate the tilt angle using the accelerometer. Wrote code to calculate roll, pitch, and yaw using the gyroscope. Wrote code to calculate the yaw angle using the magnetometer. Wrote sensor fusion code using the accelerometer and the gyroscope.

Added features to the Bluetooth communication code allowing it to send IMU data and to receive motor commands over Bluetooth. Modified the command set to add a “ramp” command (optional) and wrote Python code to spin in place, ramping the motor values from zero to maximum and back again. Collected IMU rotation rate data from the spin experiment. Wrote Python code to spin the robot at a constant speed; adjusted this speed to find a minimum and measured it using IMU data. Wrote Python code to implement PI control of the rotation rate.

Simulation (part b)

Downloaded and extracted the lab six base code. In the VM, entered the folder and ran ./setup.sh. Closed and reopened the terminal window. Started lab6-manager and robot-keyboard-teleop. Entered /home/artemis/catkin_ws/src/lab6/scripts and started jupyter lab. Ensured the notebook kernel used Python 3.

Followed the notebook instructions.

Results and Notes

Yes, the Wire code found an I²C device at 0x69. It also still recognized the other three I²C devices at the same default addresses. I won’t bore you with the output.

There was a magnet in my computer (to hold the lid shut). The magnetometer readings near that magnet changed drastically as I moved the IMU from one side of the magnet to the other. All other readings were sensible (with constant offsets). Below are demos of accelerometer and gyroscope readings.

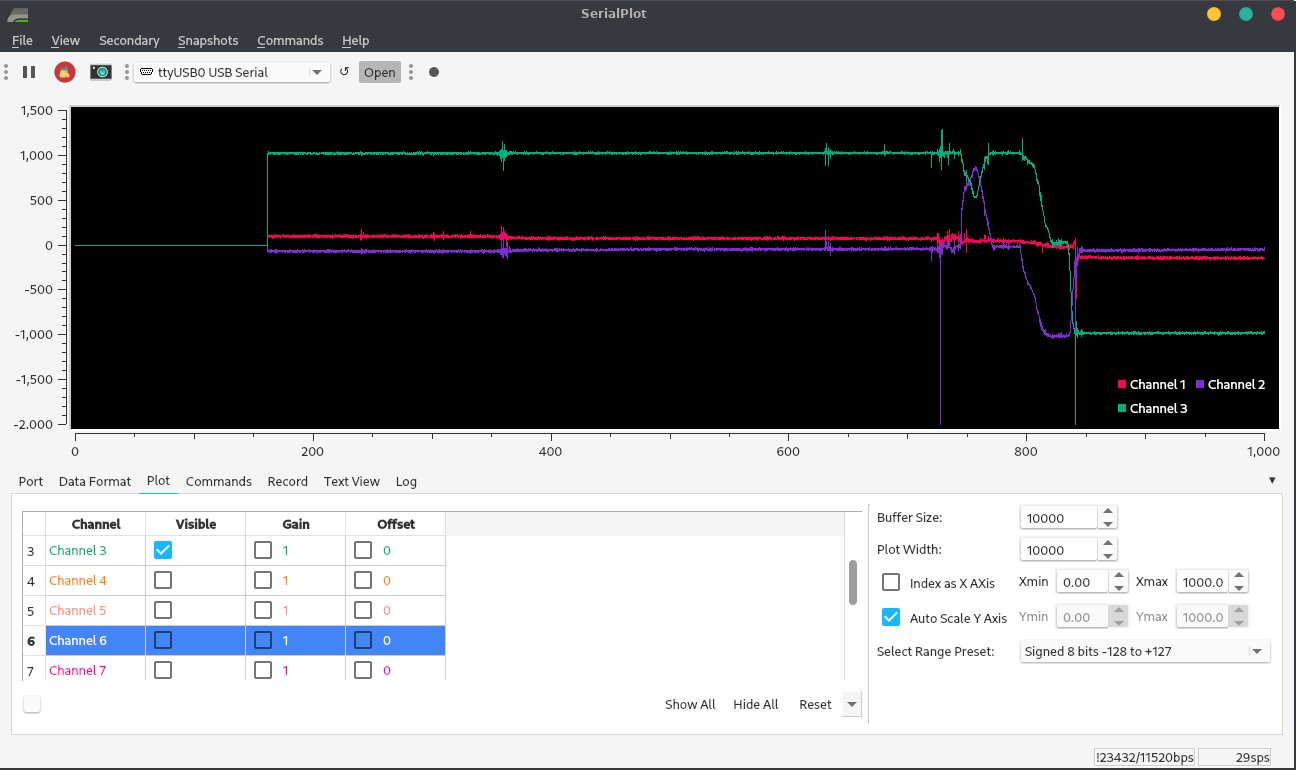

Figure 1. Accelerometer data when the robot is tilted, then flipped upside-down. The data is in milli-g’s; z is facing down when the robot is level. The axis facing the ground reads 1 g when it’s head-on; when it isn’t, the gravitational acceleration is distributed among the axes.

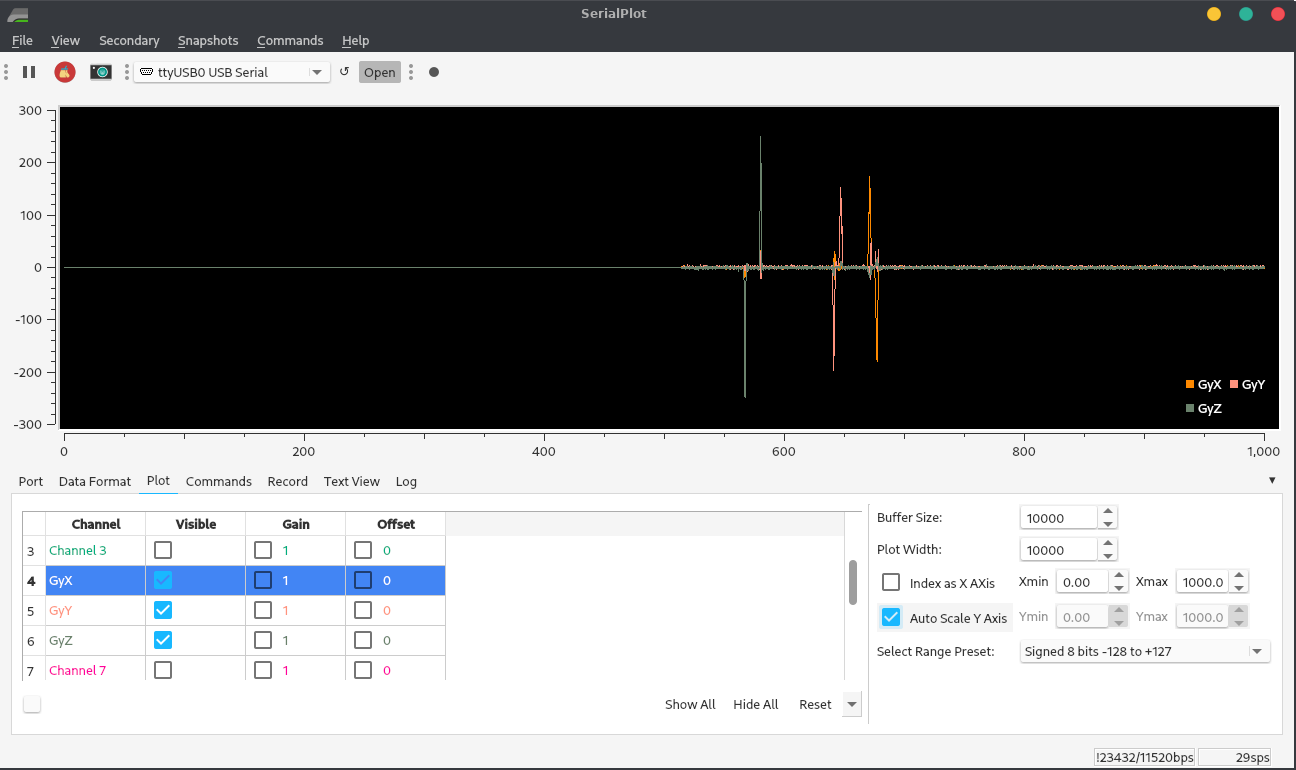

Figure 2. Gyroscope data when the robot is rotated about 90° about its three axes. The data is angular rate: the derivative of the robot’s three orientation angles. Matching the product datasheet, rotating it CCW about Z and then back gave a positive spike and then a negative spike with equal area. Rotating it CW about Y and then back gave a negative spike and then a positive spike with equal area. Rotating it CCW about X and then back gave a positive spike and then a negative spike with equal area.

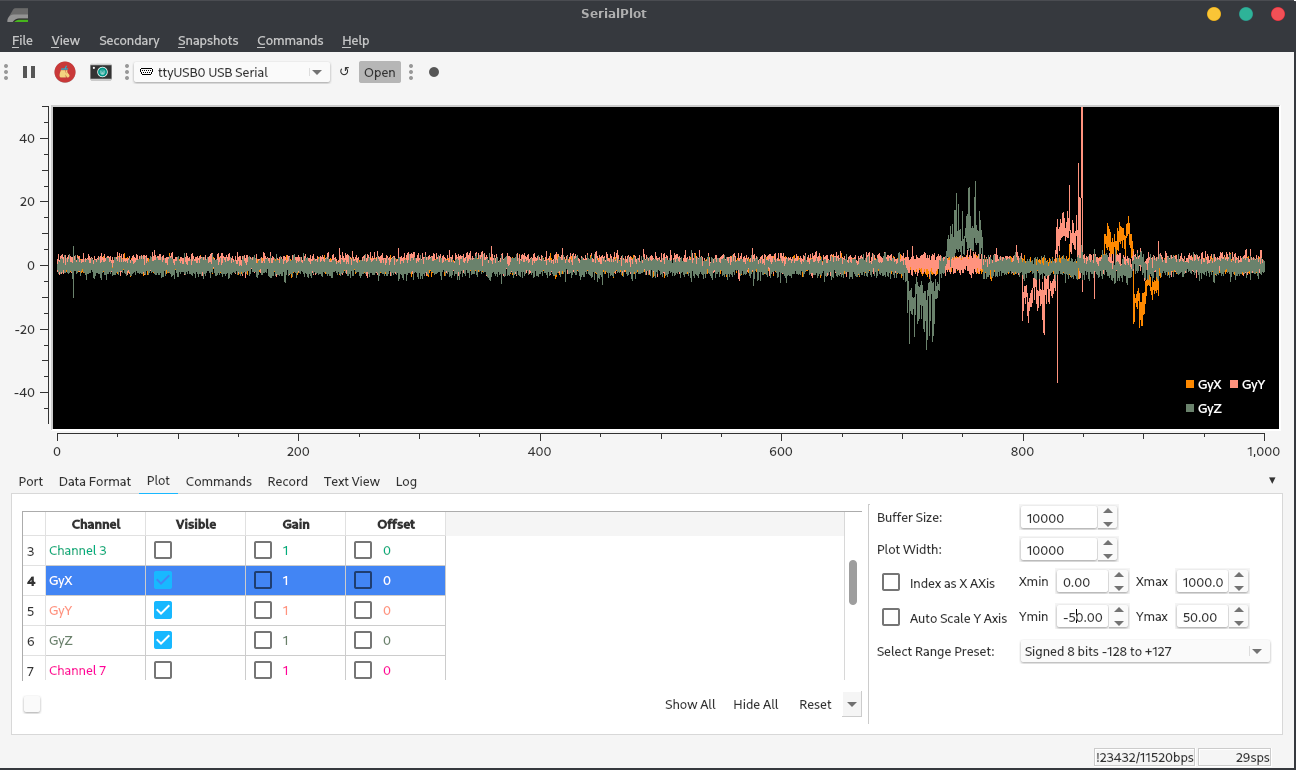

Figure 3. Bonus: if I rotate slowly, the peaks are the same area, but flattened out! The bigger peaks are from when my hand slipped and I bumped the Qwiic cable. Also note the noise in the background when the robot is stationary!

Finding Tilt from Accelerometer Data

At first, I tried finding angle using atan2. This was horribly noisy at 90° because the z acceleration, in the denominator, approached zero when the robot was tilted 90°: one axis would be accurate and the other would have nearly ±90° of noise. I solved this problem by dividing by the total acceleration and using asin instead:

```cpp
aX = myICM.accX(); aY = myICM.accY(); aZ = myICM.accZ();
a = sqrt(pow(aX,2)+pow(aY,2)+pow(aZ,2));
roll = asin(aX/a)*180/M_PI;
pitch = asin(aY/a)*180/M_PI;
Serial.printf("%4.2f,%4.2f\n",roll,pitch);
```
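To illustrate why asin behaves better near 90°, here is a small Python comparison using the same math as the Arduino snippet. The axis pairing follows the snippet above; the noisy reading is a made-up example, not measured data.

```python
import math


def tilt_atan2(ax, ay, az):
    """Tilt angles (degrees) from atan2 against the z axis: unstable when az ~ 0."""
    return math.degrees(math.atan2(ax, az)), math.degrees(math.atan2(ay, az))


def tilt_asin(ax, ay, az):
    """Tilt angles (degrees) from asin of each axis over the total acceleration."""
    a = math.sqrt(ax * ax + ay * ay + az * az)
    return math.degrees(math.asin(ax / a)), math.degrees(math.asin(ay / a))


# Hypothetical reading: tilted ~90 degrees about one axis, small noise on y and z (milli-g).
reading = (1000.0, 10.0, 1.0)
print(tilt_atan2(*reading))  # second angle is wildly wrong (about 84 degrees)
print(tilt_asin(*reading))   # second angle stays near 0.6 degrees
```

With atan2, a few milli-g of noise on z swings the second angle by tens of degrees; normalizing by the total acceleration keeps it small.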

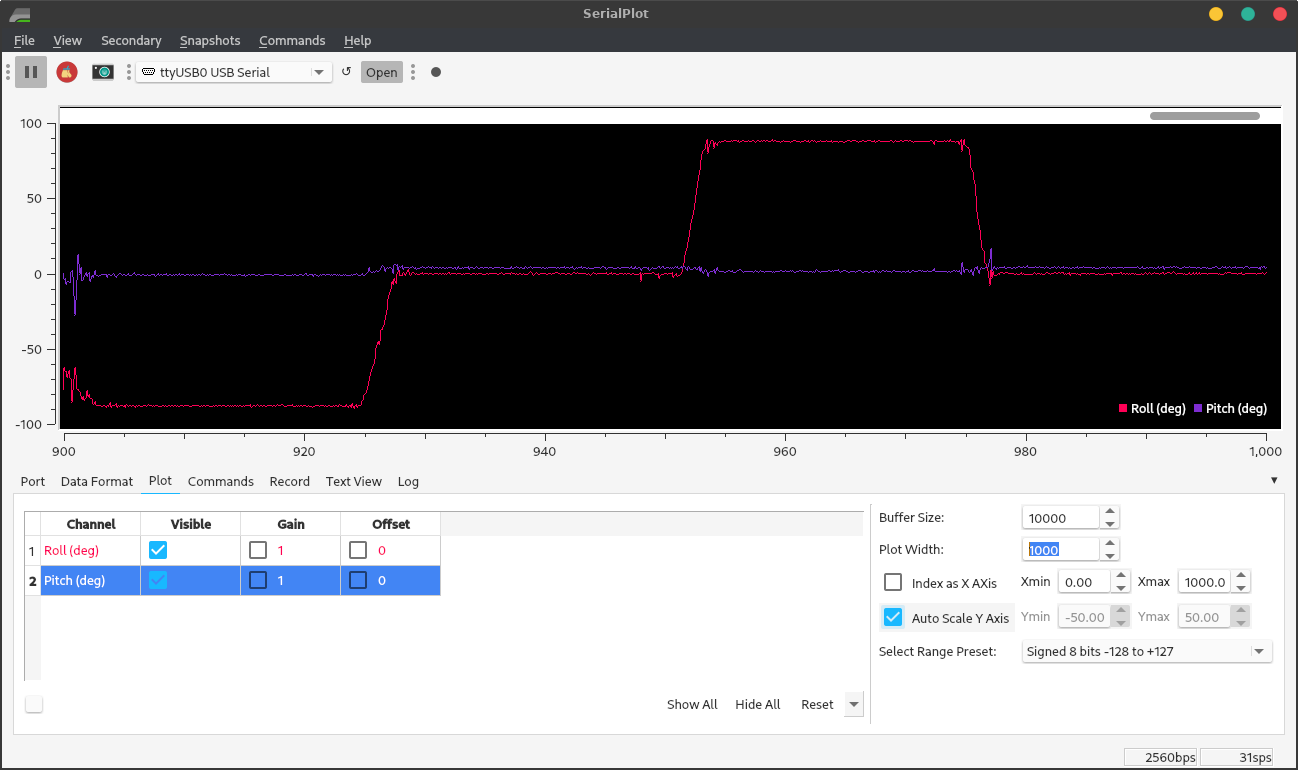

Below is the output as I moved from -90° to 0° to 90° in roll and pitch, respectively.

Figure 4. Accelerometer angle readings from roll at right angles.

Figure 5. Accelerometer angle readings from pitch at right angles.

The accelerometer is quite accurate (though noisy). Using SerialPlot, I found that it detected the 5° curve of the top of the robot. It consistently reads about 88° when I tilt it 90°, probably for the same reason that it reads more than 1 g when it’s level: calibration.

Finding Angles from Gyroscope Data

The gyroscope angles were much less noisy: since the angle is obtained by integrating the rate, it looks as if it has been through a low-pass filter. Precise calibration is difficult, since the gyroscope’s angular rate measurements change over both short and long time spans. So, some drift in the readings is inevitable, but this calibration code worked well.

```cpp
//// Calibrate gyroscope angles
//Serial.println("Calibrating gyro...");
int tCal = calTime*1000000; // 1 sec = 1000000 microseconds
float thXCal = 0, thYCal = 0, thZCal = 0; // angles to measure during calibration while the gyro is stationary
tNow = micros();
int tStartCal = tNow; // starting calibration now!
while(tNow-tStartCal < tCal){ // run for tCal microseconds (plus one loop)
  tLast = tNow; // what was new has grown old
  delay(tWait);
  myICM.getAGMT(); // The values are only updated when you call 'getAGMT'
  tNow = micros(); // update current time
  dt = (float) (tNow-tLast)*0.000001; // calculate change in time in seconds
  omX = myICM.gyrX(); omY = myICM.gyrY(); omZ = myICM.gyrZ(); // omega's (angular velocities) about all 3 axes
  thXCal = thXCal + omX*dt; // update rotation angles about X (roll)
  thYCal = thYCal + omY*dt; // Y (pitch)
  thZCal = thZCal + omZ*dt; // and Z (yaw)
}
// and we want the average rotation rate in deg/s after that loop
omXCal = thXCal / calTime;
omYCal = thYCal / calTime;
omZCal = thZCal / calTime;
//Serial.printf("Calibration offsets: X %6.4f, Y %6.4f, Z %6.4f degrees per second\n",omXCal,omYCal,omZCal);
```
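Dividing the integrated calibration angle by calTime, as the Arduino code does, is approximately the average stationary rate (the loop runs slightly longer than calTime). A Python sketch of that bias estimate and the corrected integration, using a synthetic constant offset rather than real gyro data:

```python
def gyro_bias(stationary_rates):
    """Average angular rate (deg/s) measured while the IMU sits still."""
    return sum(stationary_rates) / len(stationary_rates)


def integrate_rates(rates, dt, bias=0.0):
    """Integrate bias-corrected angular rates (deg/s) into angles (deg)."""
    angle = 0.0
    angles = []
    for rate in rates:
        angle += (rate - bias) * dt
        angles.append(angle)
    return angles


# Hypothetical gyro with a constant 0.5 deg/s offset, sampled at 100 Hz for 1 s.
dt = 0.01
stationary = [0.5] * 100
bias = gyro_bias(stationary)
print(integrate_rates(stationary, dt)[-1])        # drifts about 0.5 deg per second
print(integrate_rates(stationary, dt, bias)[-1])  # stays at 0
```

Real offsets wander over time, so a single averaged bias only removes the constant part of the drift.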

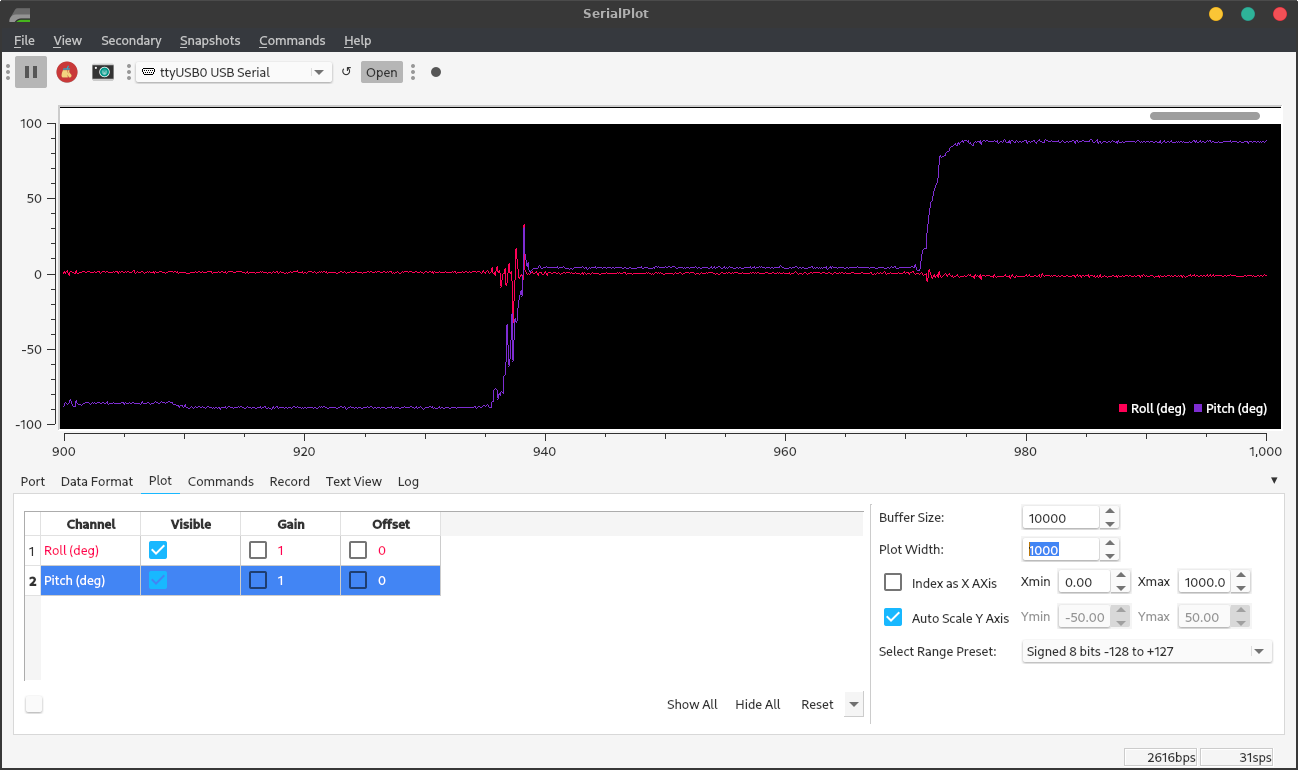

Below are the results of the same type of test as I ran on the accelerometer: rotating each axis to -90°, 0°, and +90°.

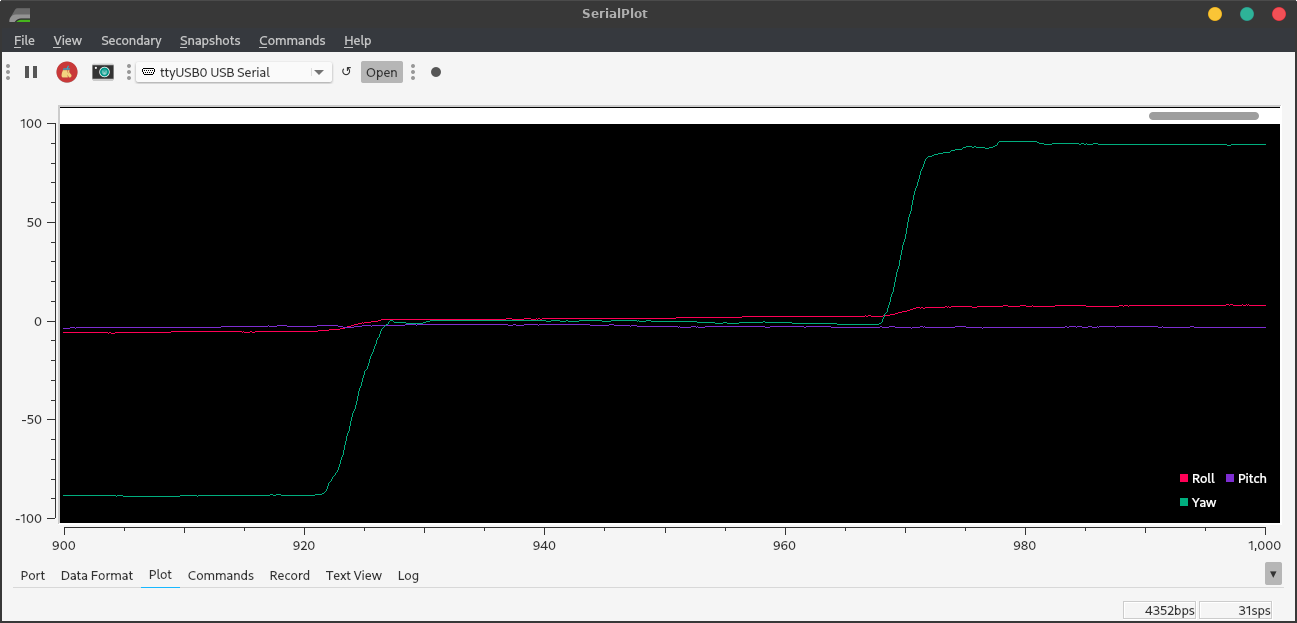

Figure 6. Gyro angle readings from roll at right angles.

Figure 7. Gyro angle readings from pitch at right angles.

Figure 8. Gyro angle readings from yaw at right angles.

Note how stable the readings are compared to those of the accelerometer. The wiggles in the graph are real: I was repositioning the robot or not holding it perfectly still. However, bad calibration can ruin the gyro readings.

Figure 9. Calibration doesn’t always work.

With sampling rates of 1 kHz or less, I noticed that the integration was always more accurate with a faster sampling rate, regardless of calibration time.

I tried removing delays, giving a sampling rate of about 10 kHz. The result was no more accurate, and there were massive spikes or sometimes step changes. I hypothesize that this happened when a noise spike coincided with a short loop cycle.

I left the wait time at 10 ms (100 Hz sampling rate) for the rest of the lab.

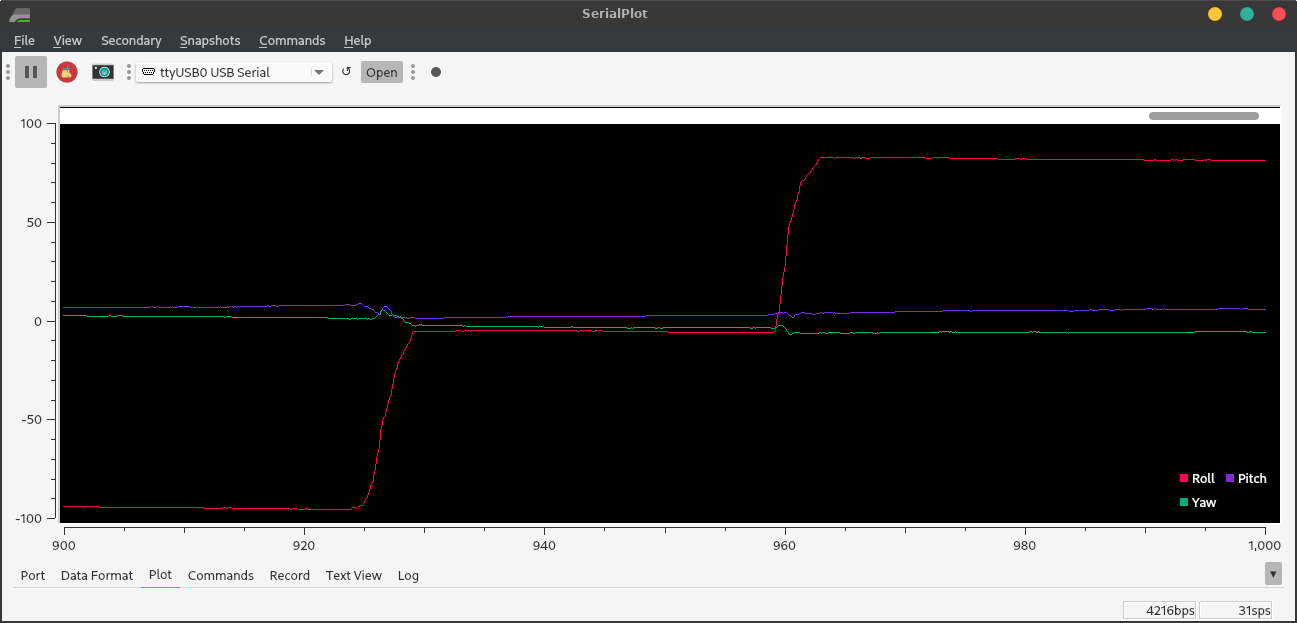

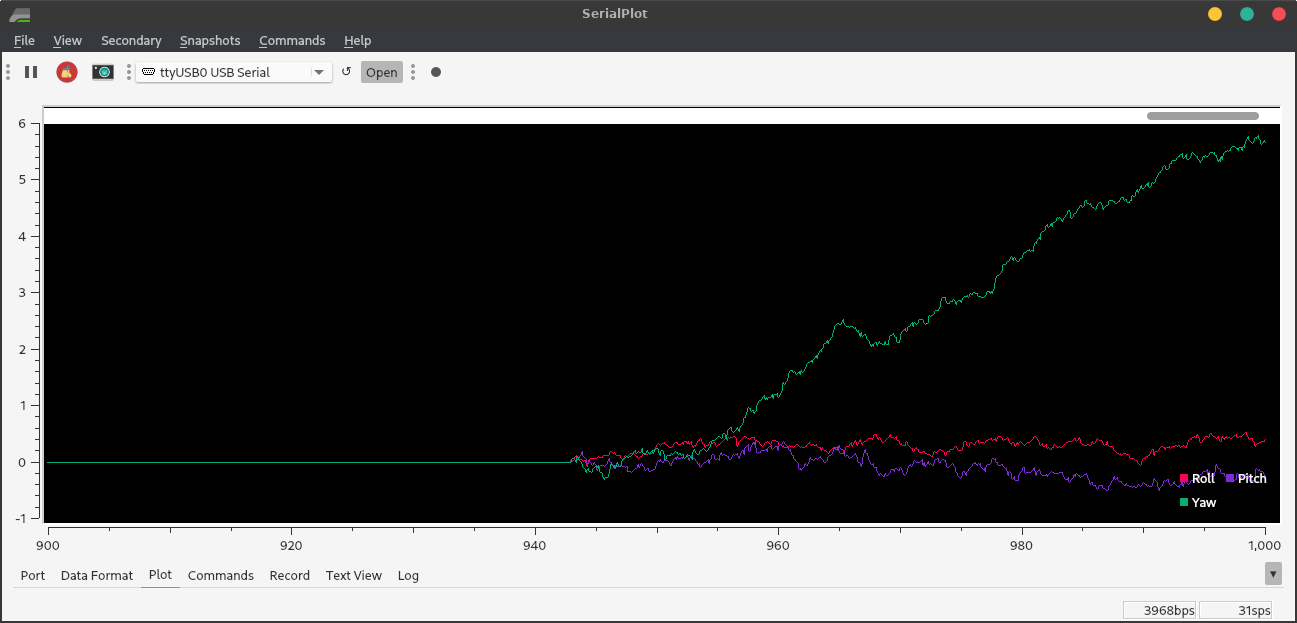

I got the best results thus far from a complementary filter with α=0.3 and a correction factor of 1 for the accelerometer. α=0.5 works fine, but is noisy. The main bumps in this graph are from my imperfect movements. Drift is about 1° per minute for yaw and zero for the other axes.

Figure 10. Roll, pitch, and yaw tests; roll and pitch have a complementary filter with α=0.3 in favor of the gyroscope.

```cpp
if( myICM.dataReady() ){
  myICM.getAGMT(); // The values are only updated when you call 'getAGMT'
  tLast = tNow; // the new has grown old
  tNow = micros();
  dt = (float) (tNow-tLast)*0.000001; // calculate change in time in seconds
  // printRawAGMT( myICM.agmt ); // Uncomment this to see the raw values, taken directly from the agmt structure
  // printScaledAGMT( myICM.agmt); // This function takes into account the scale settings from when the measurement was made to calculate the values with units
  aX = myICM.accX(); aY = myICM.accY(); aZ = myICM.accZ();
  omX = myICM.gyrX()-omXCal; // omega's (angular velocities) about all 3 axes
  omY = myICM.gyrY()-omYCal;
  omZ = myICM.gyrZ()-omZCal;
  thX = thX + omX*dt; // update rotation angles about X (roll)
  thY = thY + omY*dt; // Y (pitch)
  thZ = thZ + omZ*dt; // and Z (yaw)
  a = sqrt(pow(aX,2)+pow(aY,2)+pow(aZ,2)); // calculate total acceleration
  rollXL = asin(aY/a)*compFactorRoll*rad2deg;
  pitchXL = asin(aX/a)*compFactorPitch*rad2deg;
  roll = alpha*rollXL + (1-alpha)*thX; // complementary filter
  pitch = alpha*pitchXL + (1-alpha)*thY;
  thX = roll; thY = pitch; // overwrite any gyro drift
  yaw = thZ; // still waiting for the magnetometer
  Serial.printf("%4.2f,%4.2f,%4.2f\n",roll,pitch,yaw); // and print them
  delay(tWait);
}
```
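The roll and pitch update inside the loop above is a complementary filter. The Python sketch below isolates one step of it and shows, on synthetic data, why overwriting thX and thY with the fused value bounds gyro drift: a constant gyro offset becomes a small constant error instead of a growing one. The 1°/s offset is an assumption for illustration.

```python
def complementary_step(angle_prev, gyro_rate, accel_angle, dt, alpha=0.3):
    """One complementary-filter update, weighted like the Arduino code:
    alpha on the accelerometer angle, (1 - alpha) on the gyro-propagated angle."""
    gyro_angle = angle_prev + gyro_rate * dt
    return alpha * accel_angle + (1 - alpha) * gyro_angle


# Level robot (true angle 0) with a hypothetical uncorrected 1 deg/s gyro offset.
dt, bias = 0.01, 1.0
gyro_only = 0.0
fused = 0.0
for _ in range(1000):  # 10 s at 100 Hz
    gyro_only += bias * dt
    fused = complementary_step(fused, bias, 0.0, dt)
print(gyro_only)  # about 10 degrees of drift
print(fused)      # settles near (1 - alpha) * bias * dt / alpha, about 0.023 degrees
```

A larger alpha pulls harder toward the accelerometer, which removes drift faster but passes through more accelerometer noise, matching the observation that α=0.5 was noisier.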

Finding Angles from the Magnetometer

Magnetic interference is everywhere. Besides obvious sources like DC motors, there are magnets to hold our laptops shut, magnets in screwdrivers, and lots of steel beams in buildings. After moving the IMU to the center of my room, away from known magnets, I still saw a constant magnetic field of 50 μT into the floor. Worse, the field near the DC motors is about 1000 μT, compared to 25-65 μT from Earth.

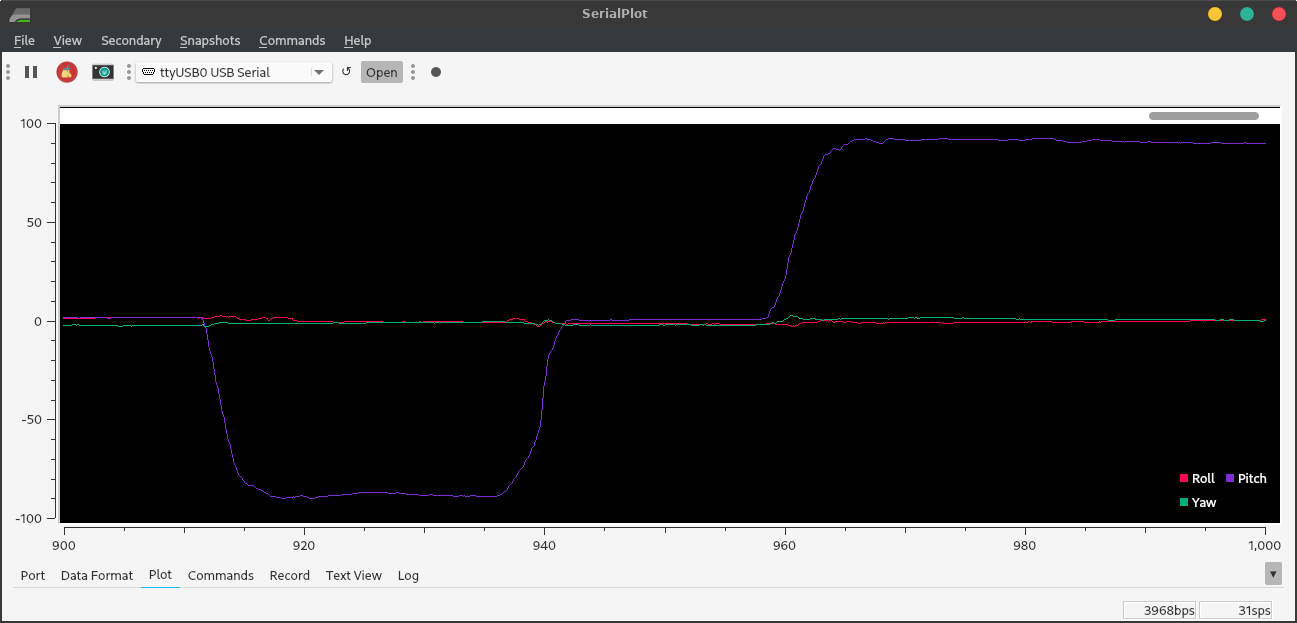

Figure 11. Magnetometer readings on top of the chassis, sitting still and pointing nearly north.

A minuscule ~60 Hz oscillation is superimposed on the constant field. But, when I roll the robot back and forth with my hand:

Figure 12. Magnetometer readings when backdriving the motors.

I was able to calibrate away all the constant fields and achieve sensible angle readings when the wheels didn’t turn. However, the change in magnetic field when the wheels turn is of similar magnitude to that of the Earth (about 20 μT amplitude) so I couldn’t get reliable magnetometer readings.

Figure 13. Magnetometer angle readings: decently accurate until the motors turn.

```cpp
//// Calibrate gyroscope and magnetometer readings
// For magnetometer calibration to work, it must begin pointing north.
//Serial.println("Point me north!");
//delay(1000);
Serial.println("Calibrating...");
int tCal = calTime*1000000; // 1 sec = 1000000 microseconds
float thXCal = 0, thYCal = 0, thZCal = 0; // angles to measure during calibration while the gyro is stationary
tNow = micros();
int tStartCal = tNow; // starting calibration now!
while(tNow-tStartCal < tCal){ // run for tCal microseconds (plus one loop)
  if( myICM.dataReady() ){
    tLast = tNow; // what was new has grown old
    delay(tWait);
    myICM.getAGMT(); // The values are only updated when you call 'getAGMT'
    tNow = micros(); // update current time
    dt = (float) (tNow-tLast)*0.000001; // calculate change in time in seconds
    omX = myICM.gyrX(); omY = myICM.gyrY(); omZ = myICM.gyrZ(); // omega's (angular velocities) about all 3 axes
    thXCal = thXCal + omX*dt; // update rotation angles about X (roll)
    thYCal = thYCal + omY*dt; // Y (pitch)
    thZCal = thZCal + omZ*dt; // and Z (yaw)
    mXCal = mXCal + myICM.magX();
    mYCal = mYCal + myICM.magY();
    mZCal = mZCal + myICM.magZ();
    count++; // total number of magnetometer readings taken (for gyro we care about rate; for mag we care about total)
  }
}
// and we want the average rotation rate in deg/s after that loop
omXCal = thXCal / calTime;
omYCal = thYCal / calTime;
omZCal = thZCal / calTime;
mXCal = mXCal / count;
mXCal = mXCal - 40; // Compensate for the earth's magnetic field
mYCal = mYCal / count;
mZCal = mZCal / count;
//Serial.printf("Calibration offsets: X %6.4f, Y %6.4f, Z %6.4f degrees per second\n",omXCal,omYCal,omZCal);

// Loop: Read angles
if( myICM.dataReady() ){
  myICM.getAGMT(); // The values are only updated when you call 'getAGMT'
  tLast = tNow; // the new has grown old
  tNow = micros();
  dt = (float) (tNow-tLast)*0.000001; // calculate change in time in seconds
  // printRawAGMT( myICM.agmt ); // Uncomment this to see the raw values, taken directly from the agmt structure
  // printScaledAGMT( myICM.agmt); // This function takes into account the scale settings from when the measurement was made to calculate the values with units
  aX = myICM.accX(); aY = myICM.accY(); aZ = myICM.accZ();
  omX = myICM.gyrX()-omXCal; // omega's (angular velocities) about all 3 axes
  omY = myICM.gyrY()-omYCal;
  omZ = myICM.gyrZ()-omZCal;
  mX = myICM.magX()-mXCal; // m's (normalized magnetometer readings) along all 3 axes
  mY = myICM.magY()-mYCal;
  mZ = myICM.magZ()-mZCal;
  thX = thX + omX*dt; // update rotation angles about X (roll)
  thY = thY + omY*dt; // Y (pitch)
  thZ = thZ + omZ*dt; // and Z (yaw)
  a = sqrt(pow(aX,2)+pow(aY,2)+pow(aZ,2)); // calculate total acceleration
  rollXL = asin(aY/a)*compFactorRoll*rad2deg;
  pitchXL = asin(aX/a)*compFactorPitch*rad2deg;
  roll = alpha*rollXL + (1-alpha)*thX; // complementary filter
  pitch = alpha*pitchXL + (1-alpha)*thY;
  thX = roll; thY = pitch; // overwrite any gyro drift
  //yaw = thZ; // not doing complementary filter yet
  rollRad = roll*deg2rad; pitchRad = pitch*deg2rad;
  xm = mX*cos(pitchRad) + mZ*sin(pitchRad);
  ym = mY*cos(rollRad) + mZ*sin(rollRad);
  mTotal = sqrt(pow(xm,2)+pow(ym,2)); // total in-plane magnetic field
  yaw = atan2(ym, xm)*rad2deg; // noisy near asymptotes
  //yaw = acos(xm/mTotal)*rad2deg; // will this work? (no)
  Serial.printf("%4.2f,%4.2f,%4.2f\n",roll,pitch,yaw); // and print them
  delay(tWait);
} // closes the dataReady() check
```
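A Python sketch of the same offset calibration and heading computation, assuming the robot is level (so it skips the tilt terms used in the Arduino code) and using that code's assumed 40 μT horizontal Earth field on x. The readings are invented. A constant offset like this cannot cancel the changing field from the spinning motors.

```python
import math


def mag_offsets(north_samples, earth_x=40.0):
    """Offsets from readings taken while stationary, level, and pointing north.

    Mirrors the Arduino calibration: average each axis, then leave earth_x
    (assumed horizontal Earth field, same units as the readings) on the x axis.
    """
    n = len(north_samples)
    ox = sum(s[0] for s in north_samples) / n - earth_x
    oy = sum(s[1] for s in north_samples) / n
    oz = sum(s[2] for s in north_samples) / n
    return ox, oy, oz


def heading_deg(reading, offsets):
    """Yaw in degrees for a level sensor (no tilt compensation)."""
    mx = reading[0] - offsets[0]
    my = reading[1] - offsets[1]
    return math.degrees(math.atan2(my, mx))


# Hypothetical constant background field of (60, -20, 5) plus Earth's 40 on x.
offsets = mag_offsets([(100.0, -20.0, 5.0)] * 50)
print(heading_deg((100.0, -20.0, 5.0), offsets))  # pointing north: 0 degrees
print(heading_deg((60.0, 20.0, 5.0), offsets))    # field rotated 90 degrees in the sensor frame
```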

PID Control

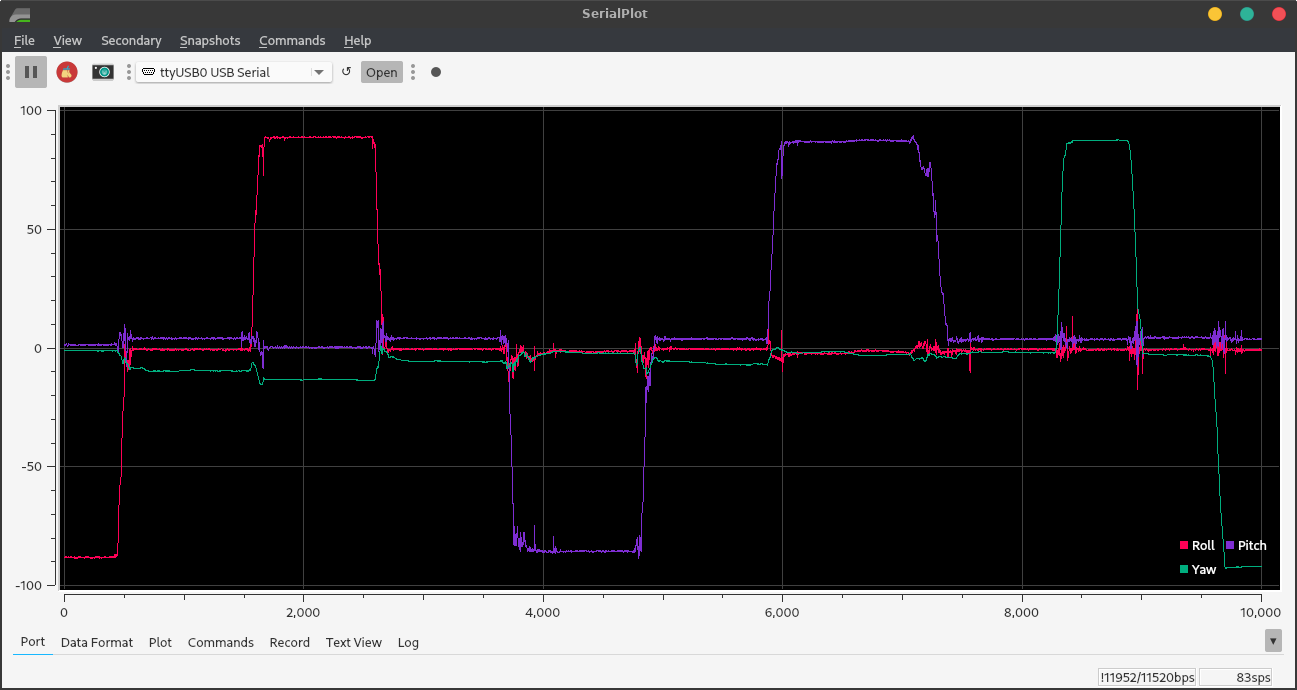

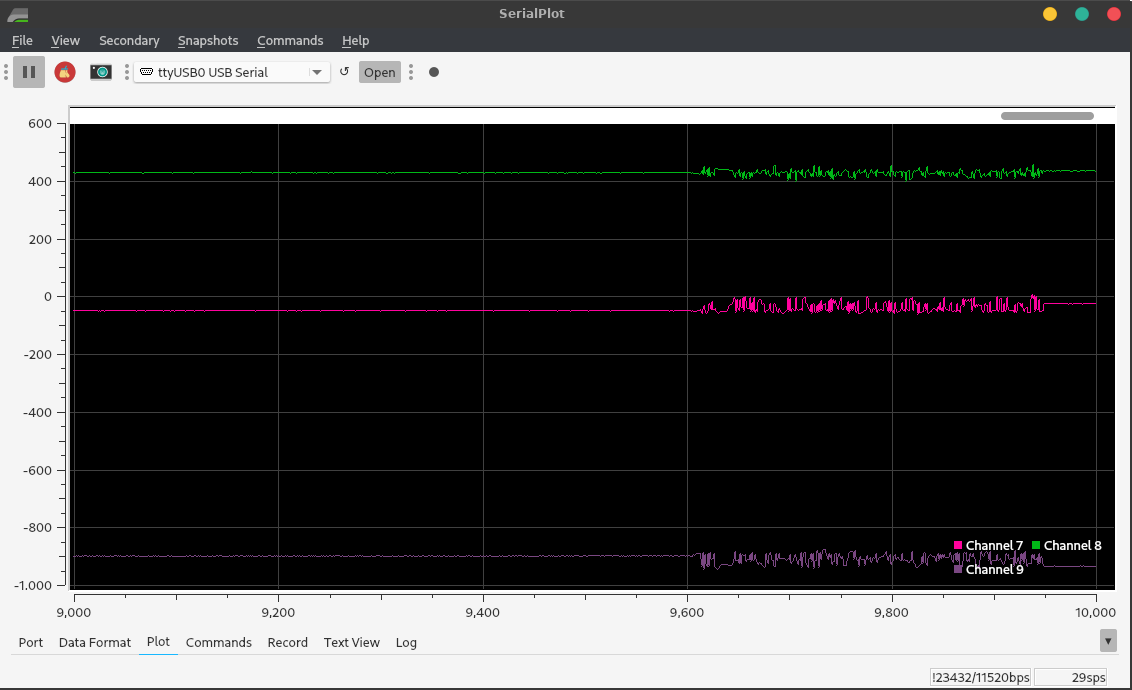

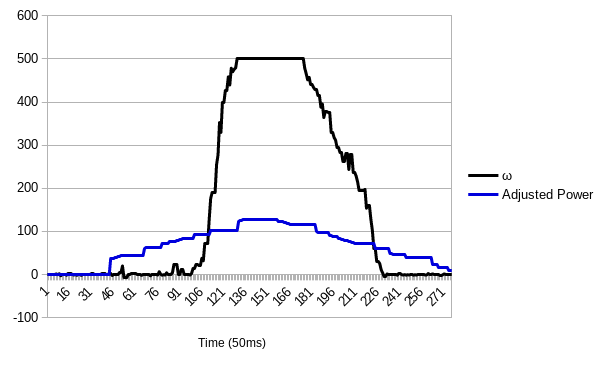

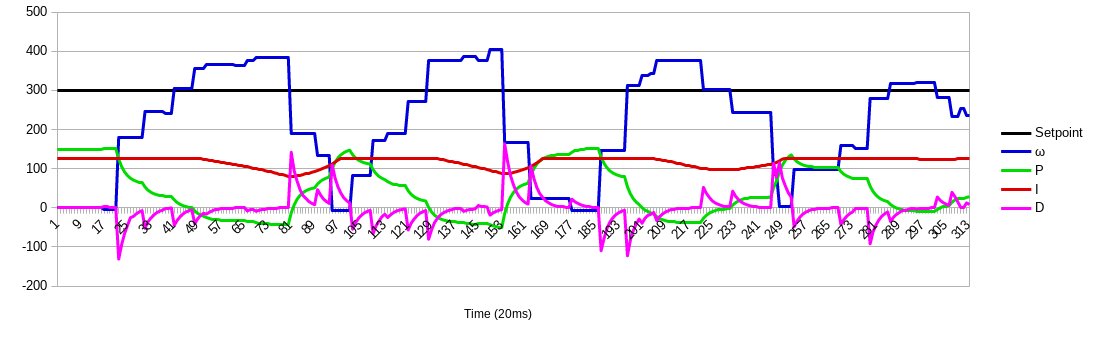

Below is my data from a smooth ramp that incremented/decremented the motor power every 50 ms. Since the right and left drive power values were the same, the robot made a bad point turn; the left wheel tended to start spinning before the right. The below graph shows one of several tries. I chose a maximum of 500°/s based on testing on asphalt; clearly, the maximum speed is different on a kitchen floor.

Figure 14. Open-loop ramp response. Note the deadband in the beginning. The gyro maxed out at 500°/s. The two wheels started spinning at nearly the same time. The bumps in the motor power graph are due to lost Bluetooth communication at a distance of 8ft. “Adjusted” power is scaled to ±127.
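The ramp itself can be generated as a list of power levels, one per 50 ms tick. In this Python sketch the step size and maximum are assumptions, and the byte encoding (level + 128, clamped to 0-255) follows the offset used in the Python PID loop below.

```python
def ramp_levels(max_power=127, step=1):
    """Motor power levels for a 0 -> max -> 0 ramp (hypothetical helper).

    Each level would be sent once per 50 ms tick, as in the ramp experiment.
    """
    up = list(range(0, max_power + 1, step))
    down = list(range(max_power - step, -1, -step))
    return up + down


def to_byte(level):
    """Shift a signed level in [-127, 127] to the 0-255 byte range, centered at 128."""
    return max(0, min(255, level + 128))


levels = ramp_levels()
print(len(levels) * 0.05, "seconds")  # duration at one step per 50 ms
print(to_byte(levels[0]), to_byte(max(levels)))
```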

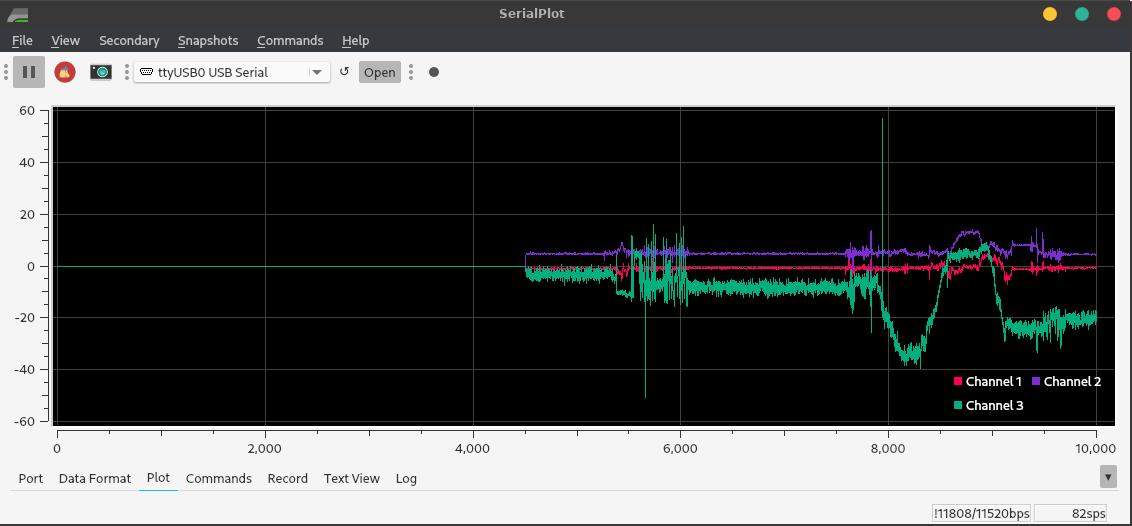

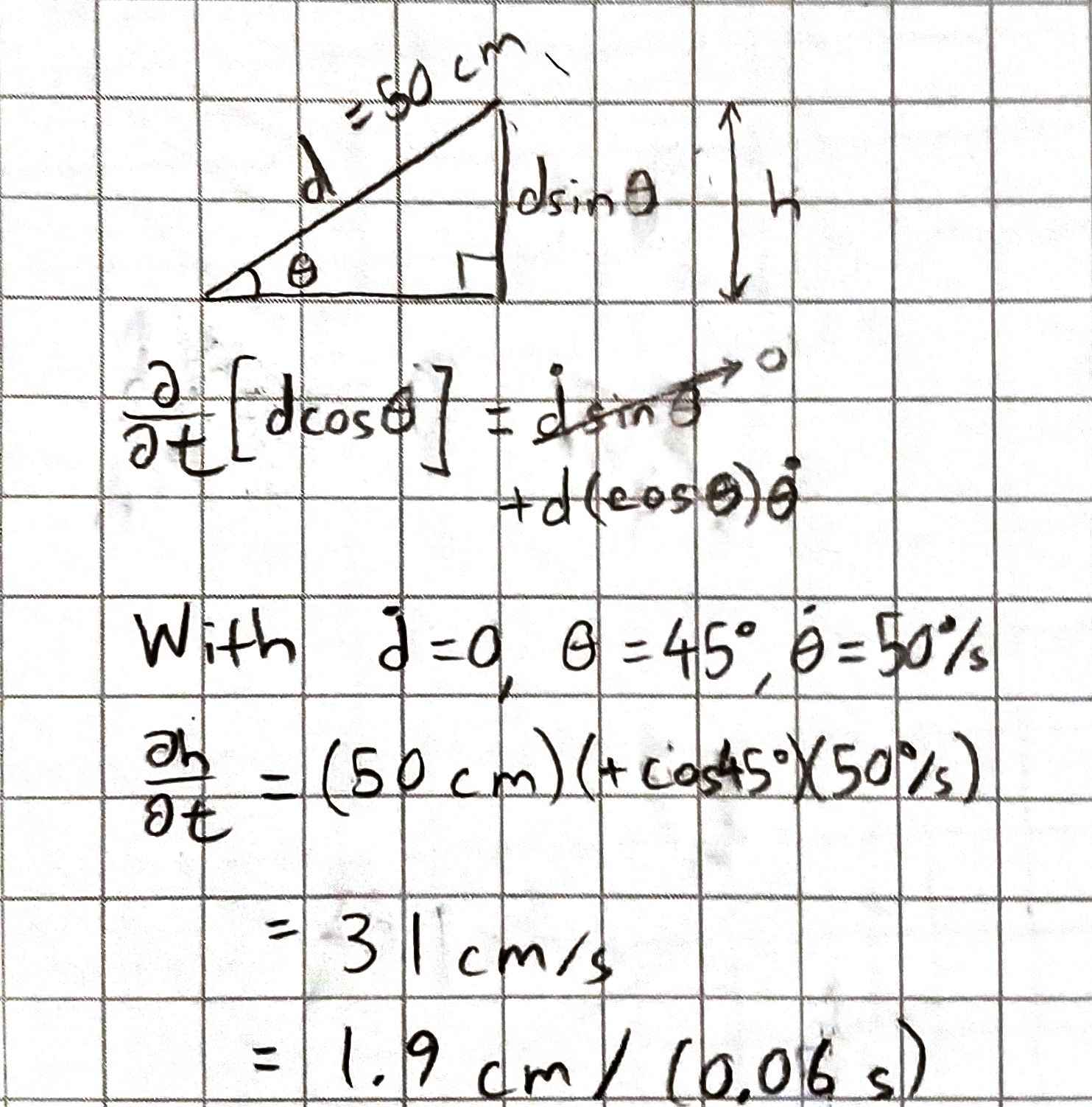

On a smooth tile floor, I found that the minimum rotational speed I could maintain was about 250°/s! At this speed, the points detected by the ToF sensor will be far apart. I found that the optimal sampling time for the ToF is 50-60 ms; per the datasheet, the minimum sampling time is 20 ms. Thus, with a 50 ms sampling time and a wall 0.5 m away, I calculated the average distance between sensed points as 11 cm! A little noise will significantly affect the generated map.

Figure 15. Open-loop step response of the robot with minimum motor power required to keep it spinning on a tile floor. Note the bumps: the robot drove over gaps between tiles.

To accurately spin the robot in place, I chose PI control (no derivative term) because the robot is a first-order system whose transfer function is already stable without a derivative term. Below are some graphs that demonstrate my tuning process: raise Kₚ until the system rings, reduce Kₚ, raise Kᵢ until it overshoots too much, drop Kᵢ, and raise Kᵢ again. I used only the gyroscope to measure yaw rates. The PI loop below ran on the computer and sent commands over Bluetooth; it could not react fast enough to the light robot’s sudden changes in speed. The graph is the best I could do at an intermediate speed of 300°/s.

Figure 16. Step response of the robot using a PI controller running on the computer in Python.

```python
# PID loop (currently PI)
# (excerpt: this runs inside an async function)
global z, e, tNow, setpoint, inte, kp, ki, kd, xyzd, levels
while True:
    await asyncio.sleep(0.05)
    zLast = z
    eLast = e
    tLast = tNow
    z = xyzd[2]  # current sensor value
    e = setpoint - z  # error
    tNow = time.clock_gettime(time.CLOCK_MONOTONIC)
    dt = tNow - tLast
    de = e - eLast  # change in error (numerator of the derivative term)
    inte = inte + (e*dt)  # integral term
    if inte > 127:
        inte = 127  # positive windup control
    elif inte < -127:
        inte = -127  # negative windup control
    output = kp*e + ki*inte + kd*(de/dt)

    # write motor power
    output = output + 128  # centered at 127/128 rather than 0
    if output < 0:  # byte values are limited to [0, 255]
        output = 0
    elif output > 255:
        output = 255
    output = int(output)  # must be an integer
    #outputL = int(output/2)  # reduced power for left: drive in an arc
    theRobot.updateMotor("left", output)  # left is reversed
    theRobot.updateMotor("right", output)  # right is forward

    # use "levels[1]" for current motor power reading from robot;
    # use "output" for current scaled output from this code
    motorPower = int((levels[1]-127.5)/1.27)  # scale output to +/-100%

    # Every loop, display desired speed, actual speed, and motor power
    print("{},{},{}".format(setpoint, z, motorPower))
```
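To see why the integral term matters for a first-order system, here is a Python simulation of the same PI structure (integral clamp included) on an assumed first-order plant. The plant gain and time constant are invented; only kp = 0.75 and ki = 1.5 come from the on-robot tuning reported later. With ki = 0 the loop settles below the setpoint, and with the integral term it converges to it.

```python
def simulate_pi(setpoint, kp=0.75, ki=1.5, gain=4.0, tau=0.2, dt=0.05, steps=200, limit=127.0):
    """Discrete PI rate control of a hypothetical first-order plant.

    Plant model (assumed, not identified from the robot):
        d(omega)/dt = (gain * u - omega) / tau
    The integral is clamped to +/-limit (anti-windup), like the loop above,
    and the command u is saturated to the +/-limit motor range.
    """
    omega, integral, history = 0.0, 0.0, []
    for _ in range(steps):
        error = setpoint - omega
        integral = max(-limit, min(limit, integral + error * dt))
        u = max(-limit, min(limit, kp * error + ki * integral))
        omega += dt * (gain * u - omega) / tau  # forward-Euler plant update
        history.append(omega)
    return history


rates = simulate_pi(100.0)
p_only = simulate_pi(100.0, ki=0.0)
print(rates[0], rates[-1])  # fast initial jump, then the integral removes the offset
print(p_only[-1])           # proportional-only control leaves a steady-state error
```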

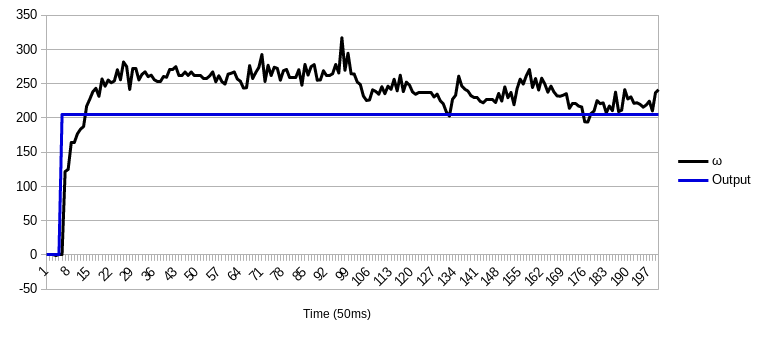

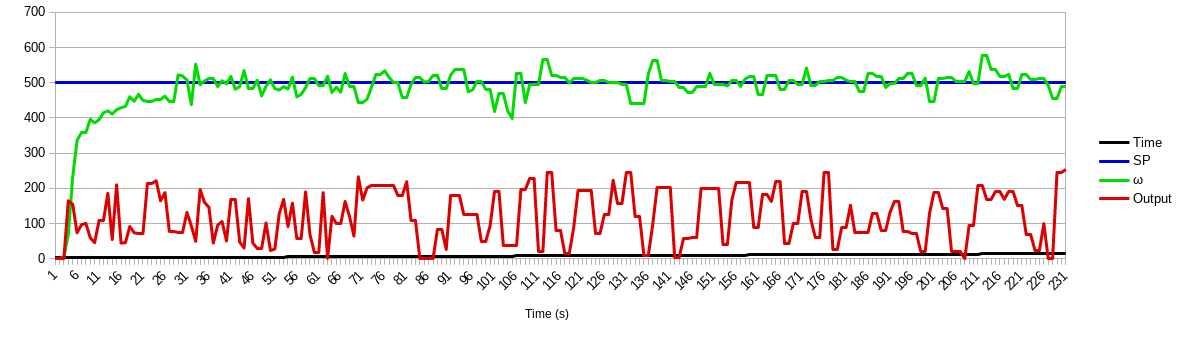

The PID controller worked much better when I ran it on the robot instead, since there was no Bluetooth latency. Below is a successful tuning graph:

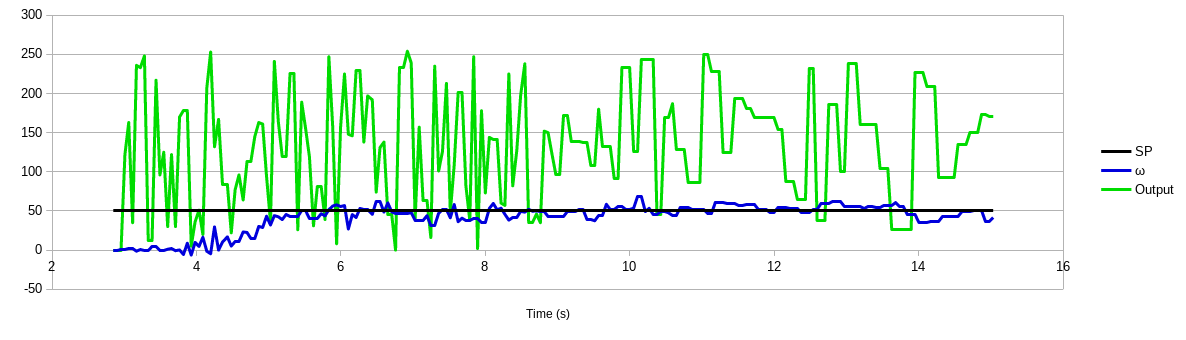

Figure 17. Step response of a PI controller running on the robot. Note I had to increase the range of the gyro to sense more than 500°/s.

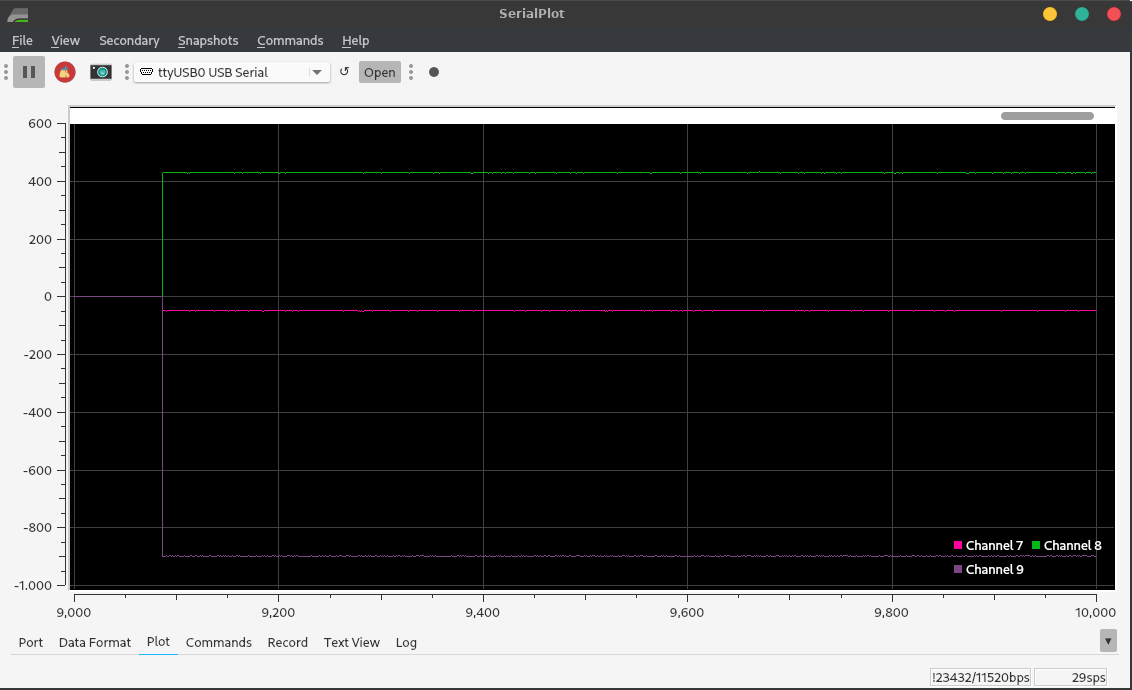

Figure 18. Moving much slower than was possible with open-loop control. Note that only one wheel was turning (even though I sent equal power to both).

The minimum rotational speed I could maintain was now 50°/s, using a PI loop with parameters kp = 0.75 and ki = 1.5 and a complementary lag filter with alpha = 0.5. This should allow much closer points, even with a 60 ms timing budget, as shown below.

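$$
\begin{aligned}
250\,^\circ/\mathrm{s} \times \frac{\pi\ \mathrm{rad}}{180^\circ} &= 4.36\ \mathrm{rad/s} \\
250\,^\circ/\mathrm{s} \times \frac{\pi\ \mathrm{rad}}{180^\circ} \times 50\ \mathrm{ms} &= 0.218\ \mathrm{rad} = 12.5^\circ \\
250\,^\circ/\mathrm{s} \times \frac{\pi\ \mathrm{rad}}{180^\circ} \times 50\ \mathrm{ms} \times 0.5\ \mathrm{m} &= 10.9\ \mathrm{cm} \\
50\,^\circ/\mathrm{s} \times \frac{\pi\ \mathrm{rad}}{180^\circ} \times 50\ \mathrm{ms} \times 0.5\ \mathrm{m} &= 2.2\ \mathrm{cm} \\
50\,^\circ/\mathrm{s} \times \frac{\pi\ \mathrm{rad}}{180^\circ} \times 60\ \mathrm{ms} \times 0.5\ \mathrm{m} &= 2.6\ \mathrm{cm}
\end{aligned}
$$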
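The spacing arithmetic as a small Python helper, treating the spacing as arc length: the angle turned between readings times the 0.5 m wall distance. As a simplifying assumption, it ignores the offset between the sensor and the rotation center.

```python
import math


def point_spacing_cm(rate_deg_s, sample_ms, wall_distance_m):
    """Arc length (cm) between consecutive ToF readings on a wall at a fixed distance."""
    angle_rad = math.radians(rate_deg_s) * sample_ms / 1000.0
    return angle_rad * wall_distance_m * 100.0


for rate, sample in ((250, 50), (50, 50), (50, 60)):
    print(rate, sample, round(point_spacing_cm(rate, sample, 0.5), 1))
```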

Below is the PID controller written in Arduino:

```cpp
if(pid){ // As long as we're running the controller,
  eLast = e; // Update error (but don't update time - this is already done in the gyro section)
  //tLast = t;
  //t = micros();
  //dt = (t - tLast)*0.000001;
  e = alphaE*(setpoint-omZ)+(1-alphaE)*eLast; // lag filter on error
  inte = inte + e*dt; // integral term
  de = e - eLast; // numerator of deriv. term
  if (inte > 255) // anti-windup control for integral
    inte = 255;
  else if (inte < -255)
    inte = -255;
  output = kp*e+ki*inte+kd*(de/dt); // calculate output
#ifdef SERIAL_PID
  Serial.printf("P = %3.1f, I = %3.1f, D = %3.1f\n",kp*e, ki*inte, kd*(de/dt));
#endif
  if (setpoint > 0){ // spinning clockwise (with IMU on bottom - CCW with IMU on top)
    if (output > 255) // limit output to 1-byte range
      output = 255;
    else if (output < 0)
      output = 0;
    scmd.setDrive(left, lRev, output);
    scmd.setDrive(right, rFwd, output);
  }
  else{ // spinning counterclockwise or stopping
    if (output > 0) // limit output to 1-byte range
      output = 0;
    else if (output < -255)
      output = -255;
    scmd.setDrive(left, lFwd, -output); // and send the NEGATIVE of the output
    scmd.setDrive(right, rRev, -output);
  }
}
```
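The line `e = alphaE*(setpoint-omZ)+(1-alphaE)*eLast` is a first-order lag (exponential smoothing) filter on the error. A Python sketch of the same recurrence shows its step response: with alpha = 0.5 the filtered error covers half the remaining gap each sample, trading some delay for less gyro noise in the controller terms. The starting value of 0 is an assumption (a zero-initialized global).

```python
def lag_filter(samples, alpha, initial=0.0):
    """First-order lag (exponential smoothing), matching the form of the
    Arduino error filter: y[n] = alpha * x[n] + (1 - alpha) * y[n - 1]."""
    filtered, previous = [], initial
    for x in samples:
        previous = alpha * x + (1 - alpha) * previous
        filtered.append(previous)
    return filtered


# Step response with alpha = 0.5: the filtered error lags the raw error.
print(lag_filter([1.0] * 4, 0.5))  # [0.5, 0.75, 0.875, 0.9375]
```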

Simulation

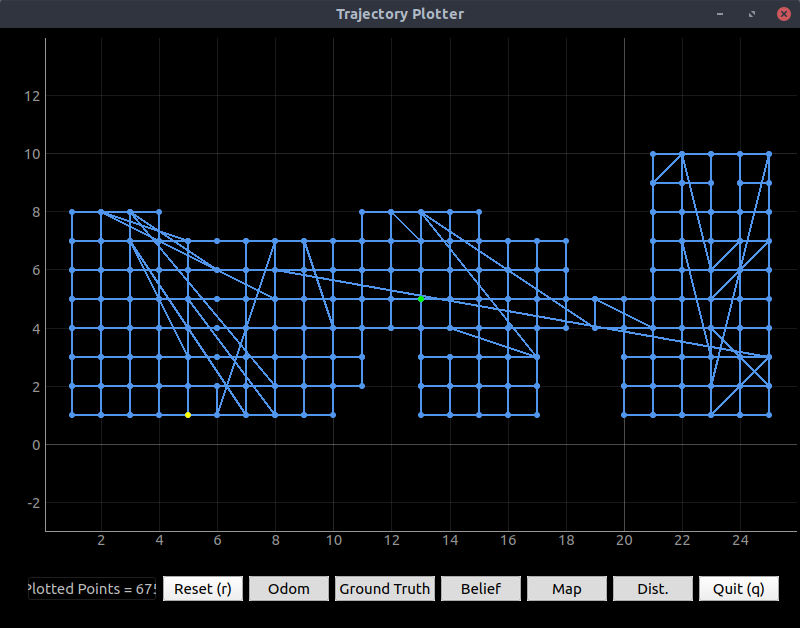

Based on the speed of readings I got in SerialPlot earlier in this lab, I reasoned that >10 points/sec was excessive. I also tried plotting rates of <10 points/sec: the dots were sparse when the robot drove faster. So, I stuck with 10 points/sec.

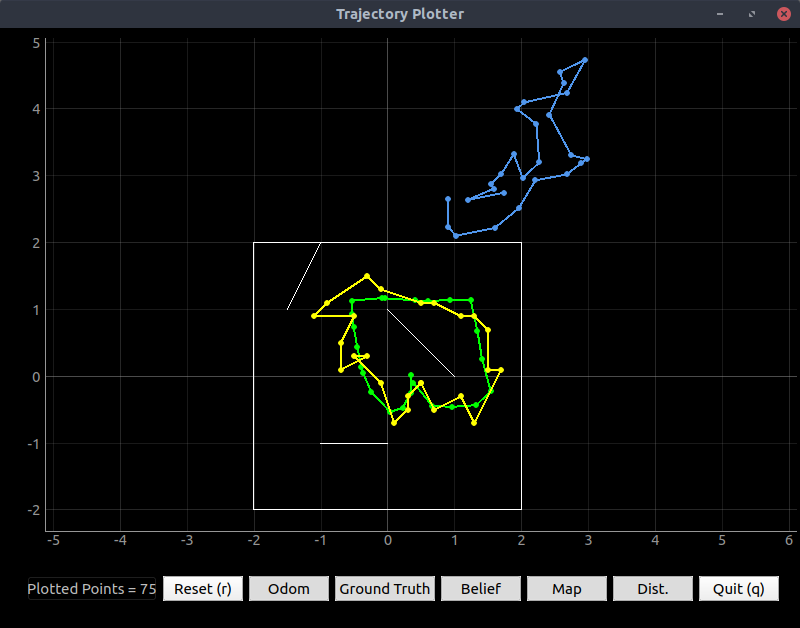

The odometry readings looked similar to the ground truth, but they were offset and rotated. The amount of the offset and rotation increased with time (even when the robot was stationary), as I would expect with IMU drift. The noise in the points was surprisingly small compared to the movement of the robot. Not surprisingly, dragging the robot around by hand completely threw off the odometry.

Note that the drift relative to distance traveled was worse (though the rate of drift over time was about equal) at very low speeds of 0.05 linear and 0.08 turn.

Figure 15. Ground-truth (green) and odometry (red) data from simulated robot.

When I drove very fast (linear 6, angular 3), the pose estimate drifted less per distance traveled. But the rate of drift appears constant with time.

See all my results and code here on GitHub. The Data.ods spreadsheet contains many commented PID tuning graphs. Also see additional things I learned and notes in the Other Lessons Learned page. I spent a lot of this lab’s time trying to relay commands and data successfully over Bluetooth, so I added a “Bluetooth” section which explains the setup, what I learned about the asyncio library, and so on.

Lab 7

Note: Used the same materials as in Lab 6 for Labs 7 through 12.

Lab 7a: Procedure

Downloaded and extracted the lab 7 base code, then ran lab7_base_code/setup.sh in the VM. Restarted the terminal emulator, entered ~/catkin_ws/src/lab7/scripts/, and ran jupyter lab. In another terminal window, ran lab7-manager and started both the simulator and the plotter.

Followed the instructions in the Jupyter lab; wrote the pseudocode below to describe the Bayes filter algorithm.

Lab 7a: Results

# In world coordinates

def compute_control(cur_pose, prev_pose):

""" Given the current and previous odometry poses, this function extracts

the control information based on the odometry motion model.

Args:

cur_pose ([Pose]): Current Pose

prev_pose ([Pose]): Previous Pose

Returns:

[delta_rot_1]: Rotation 1 (degrees)

[delta_trans]: Translation (meters)

[delta_rot_2]: Rotation 2 (degrees)

Pseudocode (well, this code probably compiles):

delta_y = cur_pose[1] - prev_pose[1]

delta_x = cur_pose[0] - prev_pose[0]

delta_rot_1 = atan2(delta_y, delta_x)

delta_trans = sqrt(delta_y^2 + delta_y^2)

delta_rot_2 = cur_pose[2] - (prev_pose[2] + delta_rot_1)

"""

return delta_rot_1, delta_trans, delta_rot_2

# In world coordinates

def odom_motion_model(cur_pose, prev_pose, u):

""" Odometry Motion Model

Args:

cur_pose ([Pose]): Current Pose

prev_pose ([Pose]): Previous Pose

u = (rot1, trans, rot2) (float, float, float): A tuple with control data

in the format (rot1, trans, rot2) with units (degrees, meters, degrees)

Returns:

prob [ndarray 20x20x18]: Probability p(x'|x, u)

Pseudocode:

prevX, prevY, prevTh = prev_pose

for x,y,theta in gridPoints: # use fromMap() to get these coords.

# Figure out what each movement would have been to get here

dx = x-prevX; dy = y-prevY; dth = theta-prevTh;

dtrans = sqrt(dx^2+dy^2)

drot1 = atan2(dy,dx)

drot2 = dth - rot1i

pR = gaussian(trans, dtrans, odom_trans_sigma)

pTh1 = gaussian(rot1, drot1, odom_rot_sigma)

pTh2 = gaussian(rot2, drot2, odom_rot_sigma)

pXYT = pR*pTh1*pTh2 # probability we got all three right

prob[indices for x, y, theta] = pXYT # use toMap

"""

return prob

def prediction_step(cur_odom, prev_odom, loc.bel):

""" Prediction step of the Bayes Filter.

Update the probabilities in loc.bel_bar based on loc.bel

from the previous time step and the odometry motion model.

Args:

cur_odom ([Pose]): Current Pose

prev_odom ([Pose]): Previous Pose

loc.bel [ndarray 20x20x18]: Previous location probability density

Returns:

loc.bel_bar [ndarray 20x20x18]: Updated location probability density

Pseudocode:

loc.bel_bar = odom_motion_model(cur_odom, prev_odom)*loc.bel

"""

return loc.bel_bar

def sensor_model(obs, cur_pose):

""" This is the equivalent of p(z|x).

Args:

obs [ndarray]: A 1D array consisting of the measurements made in rotation loop

cur_pose ([Pose]): Current Pose

Returns:

probArray [ndarray]: Returns a 1D array of size 18 (=loc.OBS_PER_CELL) with the likelihood of each individual measurements

Pseudocode:

(x,y,theta) = cur_pose

for i in range(18):

d = getViews(x,y,theta)

probArray[i] = gaussian(obs[i], d, sensor_sigma)

"""

return prob_array

# In configuration space
def update_step(bel_bar, obs):
    """ Update step of the Bayes Filter.
    Update the probabilities in loc.bel based on loc.bel_bar and the sensor model.

    Args:
        bel_bar: belief after prediction step (loc.bel_bar)
        obs [ndarray]: A 1D array consisting of the measurements made in rotation loop

    Returns:
        loc.bel [ndarray 20x20x18]: belief after update step

    Pseudocode:
        loc.bel = sensorModel(obs)*bel_bar
        eta = 1/sum(loc.bel)  # normalization constant
        loc.bel = loc.bel*eta
    """
    return loc.bel

"""
# Pseudocode for navigation with Bayes filter
while True:
    next_pose = compute_motion_planning(loc.bel)
    u = compute_control(next_pose, prev_pose)
    execute_control(u)
    cur_pose = get_odometry()  # odometry data is available once the robot has moved
    loc.bel_bar = prediction_step(cur_pose, prev_pose, loc.bel)
    obs = update sensor readings
    loc.bel = update_step(loc.bel_bar, obs)
    prev_pose = cur_pose
"""

This pseudocode

- Ignores the difference between degrees and radians (Python's trigonometric functions use radians; everything else uses degrees)

- Ignores library names

- Uses fake function names to represent things I don’t have worked out yet

- “May throw some exceptions/errors, which is expected”

I have no pictures or recordings for this part, since it consisted only of writing pseudocode. See the function documentation in the Jupyter notebook here on GitHub.
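The predict/update cycle in the pseudocode above can be tried on a toy problem to see how it behaves. The sketch below is a hypothetical one-dimensional version, not the lab's 20×20×18 grid or its `loc` object. It uses a 10-cell corridor, made-up sigmas, and a stand-in `gaussian` helper. The prediction step sums p(x_t | x_{t-1}, u)·bel(x_{t-1}) over every previous cell. The update step multiplies by the sensor likelihood and then normalizes with η.

```python
import numpy as np

def gaussian(x, mu, sigma):
    """Unnormalized Gaussian likelihood of x given mean mu and std. dev. sigma."""
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2)

def prediction_step(bel, u, trans_sigma=1.0):
    """bel_bar[j] = sum over i of p(cell j | cell i, u) * bel[i]."""
    cells = np.arange(len(bel))
    bel_bar = np.zeros(len(bel))
    for i, p_prev in enumerate(bel):   # i = previous cell
        moved = cells - i              # movement needed to reach each cell j from i
        bel_bar += gaussian(moved, u, trans_sigma) * p_prev
    return bel_bar / bel_bar.sum()

def update_step(bel_bar, z, expected, sensor_sigma=0.5):
    """bel[j] = eta * p(z | cell j) * bel_bar[j]."""
    bel = gaussian(z, expected, sensor_sigma) * bel_bar
    eta = 1 / bel.sum()                # normalization constant
    return bel * eta

# Hypothetical corridor: 10 cells, with a range sensor facing a wall just past cell 9.
n_cells = 10
expected = 9 - np.arange(n_cells)      # reading the sensor should give in each cell
bel = np.full(n_cells, 1 / n_cells)    # uniform prior: we don't know where we are
bel_bar = prediction_step(bel, u=2)    # commanded to move 2 cells forward
bel = update_step(bel_bar, z=4, expected=expected)
print(int(np.argmax(bel)))             # prints 5, the most likely cell
```

The uniform prior means the motion step barely changes the belief here. The single range reading is what concentrates it on the one cell whose expected reading matches.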

Lab 7b: Procedure

Chose a room to map. Measured the room, made a scale drawing of it, and chose three positions from which to generate the map.

Manually (and later, automatically) rotated the robot around and used matplotlib.pyplot.polar() to ensure reasonable results.

Derived the coordinate transformation matrix based on the assumption that the robot turns perfectly about its left wheels.
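Written out, the transformation places one ToF reading in the world frame. In the sketch below, $r$ is the measured distance and $\theta$ is the robot's heading. $(x_r, y_r)$ is the pivot point of the turn, and $d_y$ is the sensor's sideways offset from that pivot. These symbols are labels chosen here for illustration. They correspond to `rs[j]/1000`, `th`, the `xy` offset vector, and `d[1]` in the scan-processing code in the results.

$$
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
=
\begin{bmatrix}
\cos\theta & -\sin\theta & x_r \\
\sin\theta & \cos\theta & y_r \\
0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} r \\ d_y \\ 1 \end{bmatrix}
\quad\Longrightarrow\quad
\begin{aligned}
x &= x_r + r\cos\theta - d_y\sin\theta \\
y &= y_r + r\sin\theta + d_y\cos\theta
\end{aligned}
$$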

Took three scans and saved them as .csv files.

Wrote Python code to concatenate them all together and output a bigger .csv which contained all the data. Scatterplotted the data. Manually chose starting and ending points for obstacles and saved two lists as .csv files: StartPoints.csv and EndPoints.csv. Plotted the generated map in the Plotter tool.

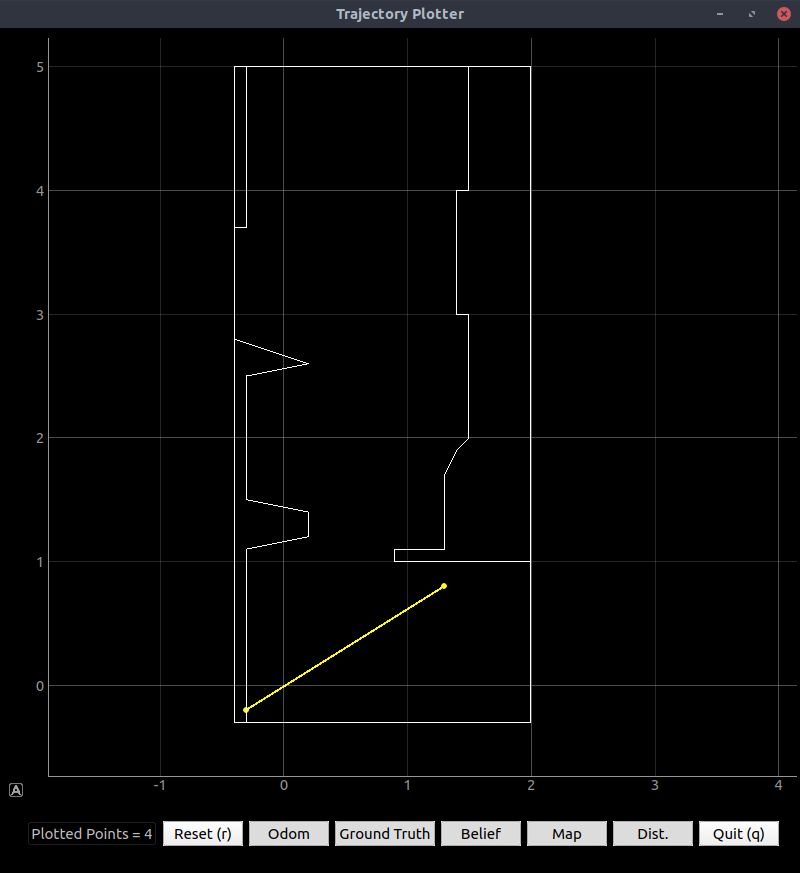

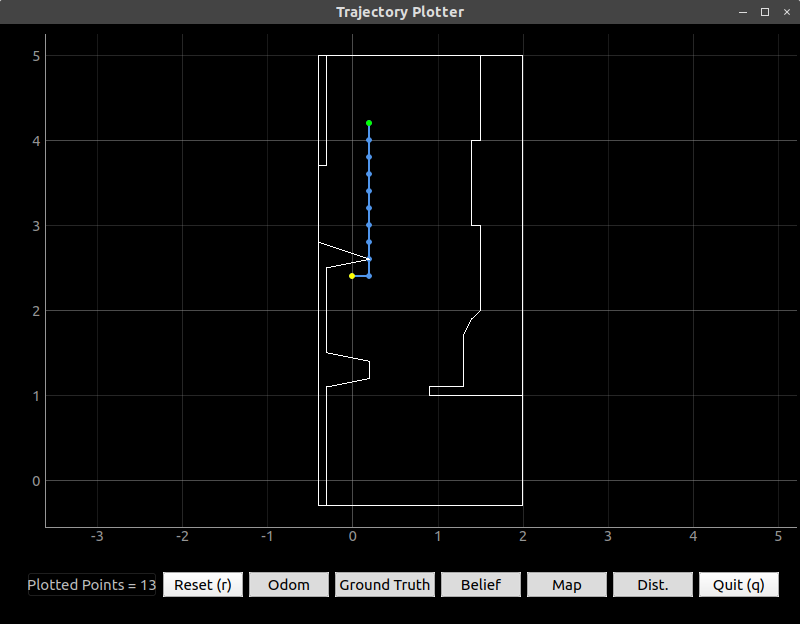

Lab 7b: Results

I chose a kitchen since it had a tile floor rather than carpet. Conveniently, the tiles were exactly 6” by 6”, so I was able to position the robot precisely (within 1 cm) using the tiles. Note that this is the same surface I used to tune the PID controller and to do most of the other testing.

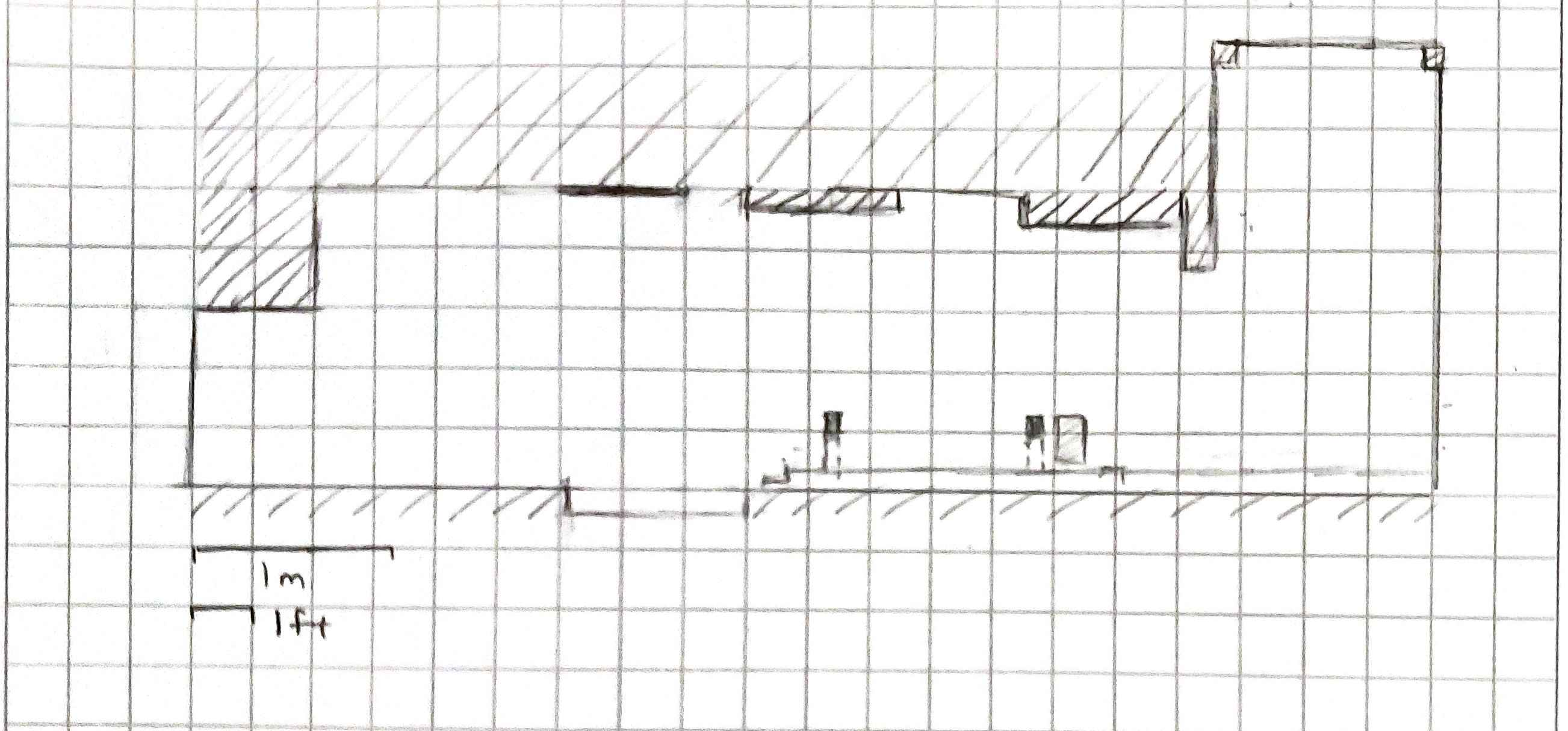

The kitchen was 6m x 3m in its largest dimensions, so I added boxes to tell the robot when to stop. Below is a sketch of the kitchen layout. Stools with shiny metal legs were removed.

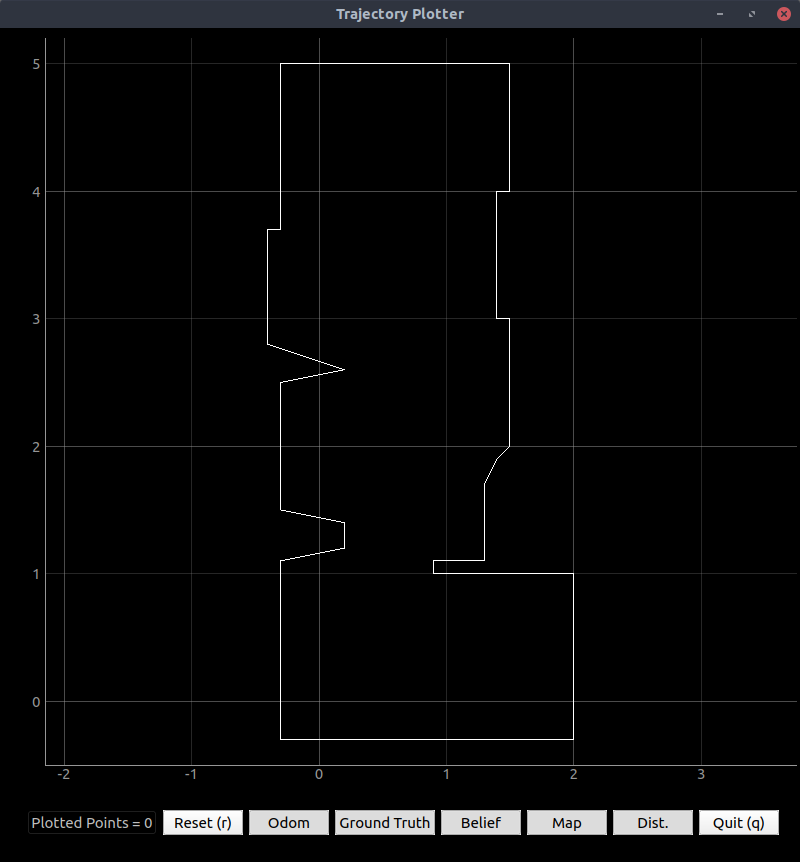

Figure 1. Layout of kitchen with no added obstacles.


I added obstacles to one end so that the room appears to be 5m x 3m; this avoids the worry of running out of memory.

Figure 2. Layout of kitchen with added “wall” of boxes, boards, etc.


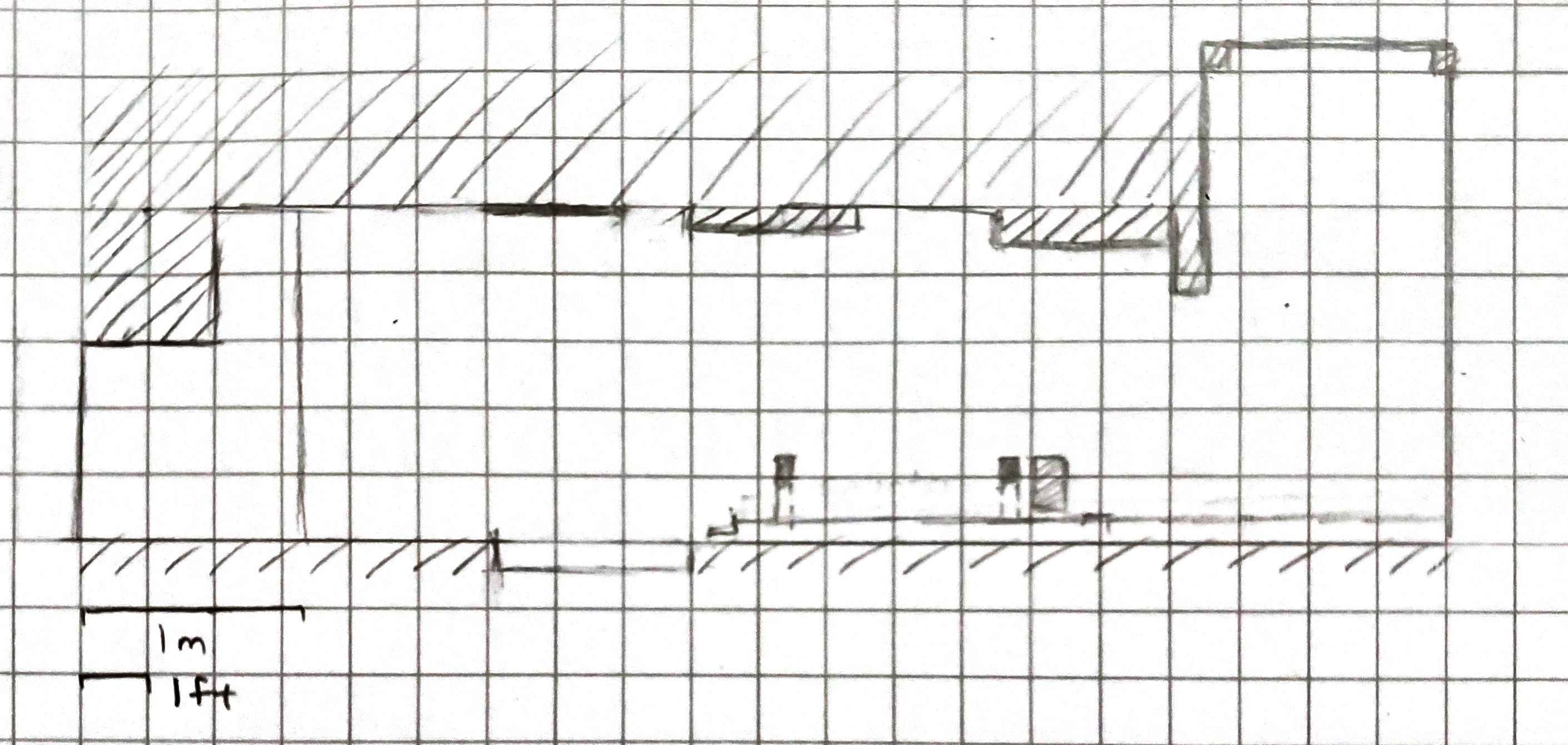

The plot (from the center of the area on the right) looked the way I expected. Note the curvature of corners due to the wide beam, and also the error due to a floor which isn’t totally flat. Significantly, the plot is rotated 90° to the left. I may need to turn my plots around due to drift in the sensor.

Figure 3. Map data acquired by manually turning the robot in place in the lower left-hand corner of the kitchen sketch shown.


Note that the sensor was not calibrated properly, so the map is rotated by about 30°. Also, the robot did not turn in place (it swing-turned about one side), so the readings are offset by the width of the robot. Finally, the kitchen is open at the jagged part, but the sensor appears to be seeing bumps in the floor there instead. To fix this, I flipped the robot upside-down so that the ToF sensor sat farther off the ground. Of course, that reversed every drive direction the PID tuning relied on, so I swapped the motor directions in the output to compensate:

// setDrive(motor, direction, powerLevel)

scmd.setDrive(left, lFwd, output); // formerly lRev

scmd.setDrive(right, rRev, output); // formerly rFwd

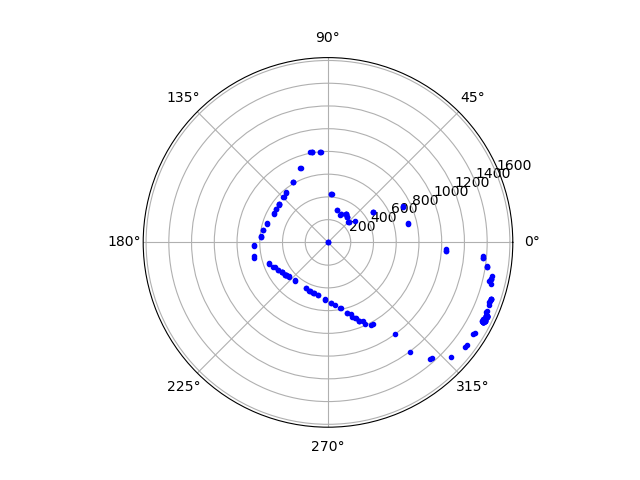

I took three 360° scans, at locations 3.5 × 3.5 tiles, 3.5 × 14.5 tiles, and 3.5 × 24.5 tiles. With 6” (0.1524 m) tiles, the starting coordinates were therefore about (0.53, 0.53) m, (0.53, 2.21) m, and (0.53, 3.73) m. All scans started in the center of a tile, facing in the positive x direction. Below is the code I used to process the three scans and generate a single scatterplot. The robot did not spin about its center axis, so I incorporated the resulting radial and y offsets into the code.

#!/usr/bin/python3
import numpy as np
import matplotlib.pyplot as plt

sq2m = 6/39.37       # tile squares to meters conversion
deg2rad = np.pi/180  # degrees to radians conversion

# (x, y) offset of each scan in tiles; y is 0.5 tile less than the
# scan location to account for the turn radius
offsets = [(3.5, 3), (3.5, 14), (3.5, 24)]

carts = []
for i, (xt, yt) in enumerate(offsets):
    filename = "Rotation" + str(i+1) + ".csv"
    polar = np.genfromtxt(filename, delimiter=',')
    n = len(polar) - 1        # number of readings used (the last row is skipped)
    thetas = polar[:n, 0]     # first column
    rs = polar[:n, 1]         # second column
    cart = np.empty([n, 3])   # one row per reading used, so no row stays uninitialized
    xy = np.array([(xt*sq2m, yt*sq2m)]).reshape(2, 1)  # 2x1 column vector, m

    for j in range(n):
        th = -thetas[j]*deg2rad  # angles are in degrees; backwards
        d = np.zeros([3, 1])     # 3x1 vector for ToF dist. measurement
        d[0] = rs[j]/1000        # distances are in mm (first element of d)
        d[1] = 0.5               # y offset (distance from center point of arc)
        d[2] = 1                 # and the last entry should be 1
        R = np.array([(np.cos(th), -np.sin(th)), (np.sin(th), np.cos(th))])
        T = np.block([[R, xy], [0, 0, 1]])
        Td = np.matmul(T, d)     # and the matrix math pays off.
        cart[j, :] = Td.reshape(3)
    carts.append(cart)

cartesianCoords = np.concatenate(carts, axis=0)  # looong x,y array
xs = cartesianCoords[:, 0]
ys = cartesianCoords[:, 1]

fig = plt.figure()
ax = fig.add_axes([0, 0, 1, 1])
ax.plot(xs, ys, 'g.')
ax.set_xlim(-1, 5)
ax.set_ylim(-1, 5)
plt.show()

output = np.vstack([xs, ys]).T
np.savetxt("Points.csv", output, delimiter=",")

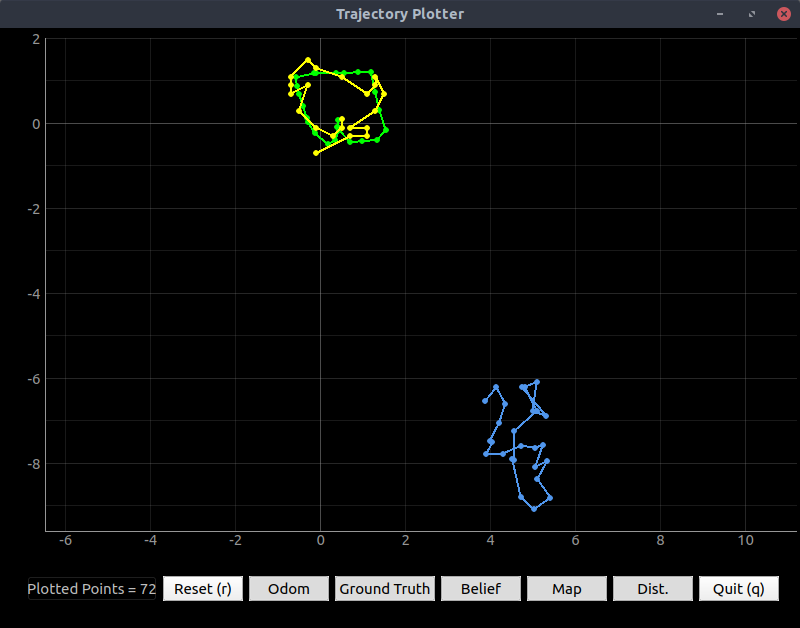

The result (after some improvement from the original) looked like a snowman.

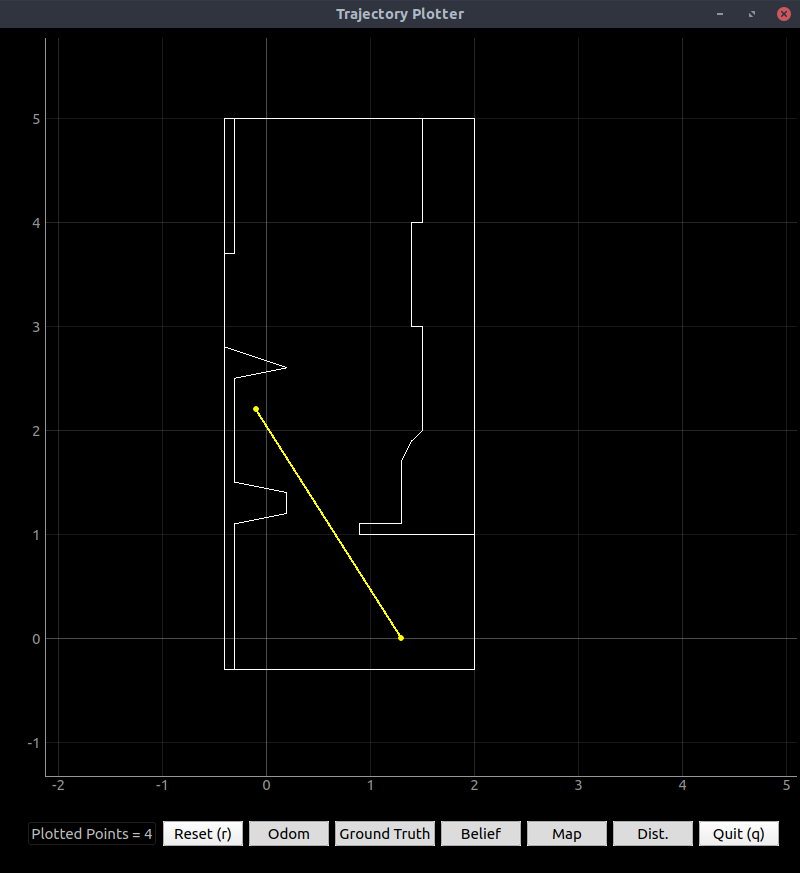

Figure 4. Drift really makes a difference in these slow scans.


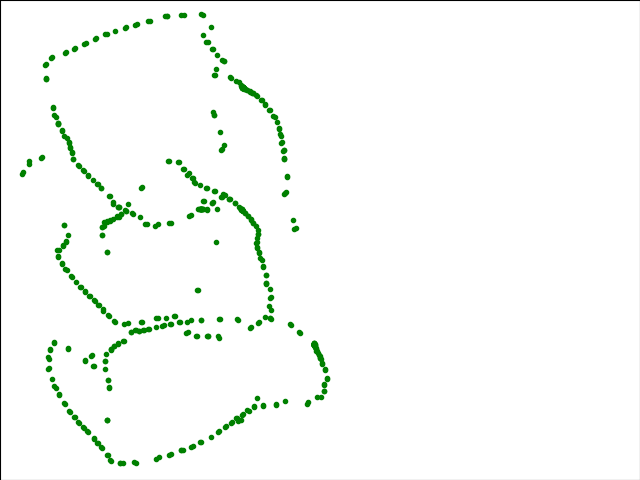

Frustrated as I was by the gyro drift, I was still able to generate a Plotter-compatible map manually. Here is the output from the VM Plotter:

Figure 5. Map plot generated using the robot’s data with heavy reliance on the real map.


To achieve this output, I imported CSV files again:

# Start points for each line segment describing the map

start_points = np.genfromtxt("StartPoints.csv", delimiter=",")

# End points for each line segment describing the map

end_points = np.genfromtxt("EndPoints.csv", delimiter=",")
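Each file loads as an N×2 array. This sketch assumes that row k of StartPoints.csv and row k of EndPoints.csv are the two (x, y) endpoints of one wall segment, in meters. Below is a minimal way to produce such a pair from an ordered list of corners. The rectangle is hypothetical, not the kitchen, and interior obstacles would need segments of their own.

```python
import os
import tempfile
import numpy as np

# Hypothetical 5 m x 3 m rectangular room, corners listed in order (meters).
corners = np.array([[0.0, 0.0], [5.0, 0.0], [5.0, 3.0], [0.0, 3.0]])

# Segment k runs from corners[k] to corners[k+1], wrapping back to corners[0].
start_points = corners
end_points = np.roll(corners, -1, axis=0)

# Write to a temporary folder so no real map files are overwritten.
with tempfile.TemporaryDirectory() as folder:
    start_file = os.path.join(folder, "StartPoints.csv")
    end_file = os.path.join(folder, "EndPoints.csv")
    np.savetxt(start_file, start_points, delimiter=",")
    np.savetxt(end_file, end_points, delimiter=",")

    # Reading back gives the same (N, 2) arrays as the np.genfromtxt calls above.
    start_loaded = np.genfromtxt(start_file, delimiter=",")
    end_loaded = np.genfromtxt(end_file, delimiter=",")

print(start_loaded.shape, end_loaded[1])  # (4, 2) [5. 3.]
```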

I should note that each scan produces many more than 18 ToF readings, and the number varies from scan to scan. For the next lab, I intend to take the median of all readings from 10° to 30° and call that the 20° reading, then take the median of readings from 30° to 50° and call that the 40° reading, and so on. The median filters out occasional outliers and is likely to match the actual distance at 20°. If something went wrong with that point or its Bluetooth packet was dropped, the median is the next best thing. The mean would not be satisfactory because it would round out corners (such as the wall obstacle and the legs of the kitchen counter) too much, and seeing these features is important for localization.
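A minimal sketch of that binning plan follows. It assumes each scan arrives as parallel lists of angles (degrees) and distances (mm). The function name `bin_readings` and the use of NaN for empty windows are hypothetical choices for illustration, not part of the lab code.

```python
import numpy as np

def bin_readings(angles_deg, dists_mm, n_bins=18):
    """Reduce a variable-length scan to n_bins readings: the median of every reading
    in each window (10-30 deg -> 20 deg, 30-50 deg -> 40 deg, ..., 350-10 deg -> 360 deg)."""
    width = 360 / n_bins                                  # 20 degrees for 18 bins
    angles = np.mod(np.asarray(angles_deg, dtype=float), 360)
    dists = np.asarray(dists_mm, dtype=float)
    # Shift by half a window so each window is centered on a multiple of `width`;
    # the modulo wraps readings just past 0 deg into the last (360 deg) window.
    idx = np.floor((angles - width / 2) / width).astype(int) % n_bins
    centers = (np.arange(n_bins) + 1) * width             # 20, 40, ..., 360
    medians = np.full(n_bins, np.nan)                     # NaN marks an empty window
    for k in range(n_bins):
        in_window = dists[idx == k]
        if in_window.size > 0:
            medians[k] = np.median(in_window)
    return centers, medians

centers, medians = bin_readings([12, 18, 25, 28, 35], [500, 510, 2000, 505, 800])
print(centers[0], medians[0])  # 20.0 507.5  (a mean would give 878.75)
```

Empty windows come back as NaN, so a dropped packet stays visible instead of being silently filled. Deciding whether to skip that cell or borrow a neighbor's value is a separate choice.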

Note that I collected data and wrote code beyond what is mentioned here; see the GitHub Lab7 folder for the slightly modified PID code, Python code to print r-θ pairs, and more.

Lab 8

Used the same materials as Labs 6 and 7.

Procedure

Downloaded and extracted the Lab 8 base code, ran setup.sh from the folder, closed and reopened the terminal window, and ran jupyter lab from ~/catkin_ws/src/lab8/scripts.
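The compute_control function below splits each move into (rot1, trans, rot2). For comparison, here is a self-contained sketch of the textbook odometry decomposition, in which rot1 is measured relative to the previous heading. That lets the three motions be replayed from the previous pose to recover the new one. `normalize_angle` is a hypothetical stand-in for `mapper.normalize_angle`, assumed to wrap degrees into [-180°, 180°). `apply_control` is an illustrative inverse used only to check the result.

```python
import math

def normalize_angle(a):
    """Wrap an angle in degrees into [-180, 180)."""
    return (a + 180.0) % 360.0 - 180.0

def compute_control(cur_pose, prev_pose):
    """Decompose prev_pose -> cur_pose, each (x [m], y [m], theta [deg]),
    into (rot1 [deg], trans [m], rot2 [deg])."""
    dx = cur_pose[0] - prev_pose[0]
    dy = cur_pose[1] - prev_pose[1]
    heading = math.degrees(math.atan2(dy, dx))                # direction of travel
    rot1 = normalize_angle(heading - prev_pose[2])             # turn toward it
    trans = math.hypot(dx, dy)                                  # drive straight
    rot2 = normalize_angle(cur_pose[2] - prev_pose[2] - rot1)  # turn to final heading
    return rot1, trans, rot2

def apply_control(prev_pose, u):
    """Replay (rot1, trans, rot2) from prev_pose to recover the new pose."""
    rot1, trans, rot2 = u
    th = prev_pose[2] + rot1
    x = prev_pose[0] + trans * math.cos(math.radians(th))
    y = prev_pose[1] + trans * math.sin(math.radians(th))
    return x, y, normalize_angle(th + rot2)

prev, cur = (0.0, 0.0, 90.0), (1.0, 1.0, 0.0)
u = compute_control(cur, prev)
print(u)  # rot1 = -45 deg, trans ~ 1.414 m, rot2 = -45 deg
```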

Using pseudocode from Lab 7, wrote the following Bayes Filter code (with debugging print statements removed) in Python 3:

# Import useful Numpy functions

from numpy import arctan2, hypot, pi, deg2rad, rad2deg, arange

# and alias several functions to prevent errors later

odom_rot_sigma = loc.odom_rot_sigma

odom_trans_sigma = loc.odom_trans_sigma

sensor_sigma = loc.sensor_sigma

gaussian = loc.gaussian

normalize_angle = mapper.normalize_angle

to_map = mapper.to_map

toMap = to_map

from_map = mapper.from_map

fromMap = from_map

# In world coordinates

def compute_control(cur_pose, prev_pose):
    """ Given the current and previous odometry poses, this function extracts
    the control information based on the odometry motion model.

    Args:
        cur_pose ([Pose]): Current Pose
        prev_pose ([Pose]): Previous Pose

    Returns:
        [delta_rot_1]: Rotation 1 (degrees)
        [delta_trans]: Translation (meters)
        [delta_rot_2]: Rotation 2 (degrees)

    Pseudocode (well, this code probably compiles):
        delta_y = cur_pose[1] - prev_pose[1]
        delta_x = cur_pose[0] - prev_pose[0]
        delta_rot_1 = atan2(delta_y, delta_x)
        delta_trans = sqrt(delta_x**2 + delta_y**2)
        delta_rot_2 = cur_pose[2] - (prev_pose[2] + delta_rot_1)
    """
    dy = cur_pose[1] - prev_pose[1]  # pre-compute these since they'll be
    dx = cur_pose[0] - prev_pose[0]  # used twice

    # normalize the change in angle (could be >180°)
    dTheta = normalize_angle(cur_pose[2] - prev_pose[2])

    delta_rot_1 = rad2deg(arctan2(dy, dx))
    delta_trans = hypot(dx, dy)  # get magnitude of dx, dy
    delta_rot_2 = dTheta - delta_rot_1

    return delta_rot_1, delta_trans, delta_rot_2

# In world coordinates

def odom_motion_model(cur_pose, bel, u):
    """ Odometry Motion Model

    Args:
        cur_pose ([Pose]): Current Pose (x,y,th) in meters
        bel ([ndarray 20x20x18]): Belief about position from last iteration
        u = (rot1, trans, rot2) (float, float, float): A tuple with control data
            in the format (rot1, trans, rot2) with units (degrees, meters, degrees)

    Returns:
        prob [scalar float]: Probability sum over x_{t-1} (p(x'|x, u)) at the cur_pose.

    Pseudocode (MODIFIED):
        rot1, trans, rot2 = u
        x, y, th = cur_pose
        for prevX,prevY,prevTh in gridPoints:  # use fromMap() to get these coords.
            # Figure out what each movement would have been to get here
            dx = x-prevX; dy = y-prevY; dth = th-prevTh
            dtrans = sqrt(dx^2+dy^2)
            drot1 = atan2(dy,dx)
            drot2 = dth - drot1
            pR = gaussian(trans, dtrans, odom_trans_sigma)
            pTh1 = gaussian(rot1, drot1, odom_rot_sigma)
            pTh2 = gaussian(rot2, drot2, odom_rot_sigma)
            pXYT = pR*pTh1*pTh2  # probability we got all three right
            prob[x,y,th] = prob[x,y,th] + pXYT*bel[prevX,prevY,prevTh]
    """
    # Determine what each movement would have been to get here: 20x20x18 array
    # We don't know where we are or where we were, but we have
    # * A guess for where we are now (cur_pose).
    # * A distribution for where we were. Sum over that in this function.
    drot1 = np.empty([20,20,1])    # first rotation to travel to point x,y
    dtrans = np.empty([20,20,1])   # translation to travel to x,y after rotating
    drot2 = np.empty([20,20,18])   # second rotation to achieve angle theta

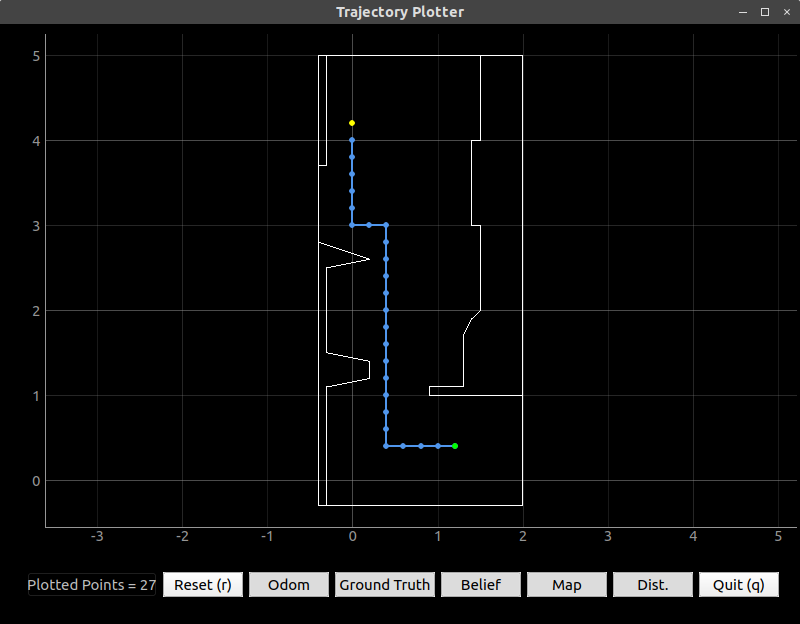

    (x,y,th) = mapper.to_map(*cur_pose)  # actually the indices for x,y,th